Mirror of https://github.com/open-compass/opencompass.git (synced 2025-05-30 16:03:24 +08:00)

Update README.md (#531)

parent 8cdd9f8e16

commit b562a13619

README.md: 12 lines changed

@@ -42,7 +42,7 @@ Just like a compass guides us on our journey, OpenCompass will guide you through
 - **\[2023.09.06\]** [**Baichuan2**](https://github.com/baichuan-inc/Baichuan2) team adpots OpenCompass to evaluate their models systematically. We deeply appreciate the community's dedication to transparency and reproducibility in LLM evaluation.
 - **\[2023.09.02\]** We have supported the evaluation of [Qwen-VL](https://github.com/QwenLM/Qwen-VL) in OpenCompass.
 - **\[2023.08.25\]** [**TigerBot**](https://github.com/TigerResearch/TigerBot) team adpots OpenCompass to evaluate their models systematically. We deeply appreciate the community's dedication to transparency and reproducibility in LLM evaluation.
-- **\[2023.08.21\]** [**Lagent**](https://github.com/InternLM/lagent) has been released, which is a lightweight framework for building LLM-based agents. We are working with Lagent team to support the evaluation of general tool-use capability, stay tuned!
+- **\[2023.08.21\]** [**Lagent**](https://github.com/InternLM/lagent) has been released, which is a lightweight framework for building LLM-based agents. We are working with the Lagent team to support the evaluation of general tool-use capability, stay tuned!
 
 > [More](docs/en/notes/news.md)
 

@ -50,13 +50,13 @@ Just like a compass guides us on our journey, OpenCompass will guide you through

|

||||

|

||||

|

||||

|

||||

OpenCompass is a one-stop platform for large model evaluation, aiming to provide a fair, open, and reproducible benchmark for large model evaluation. Its main features includes:

|

||||

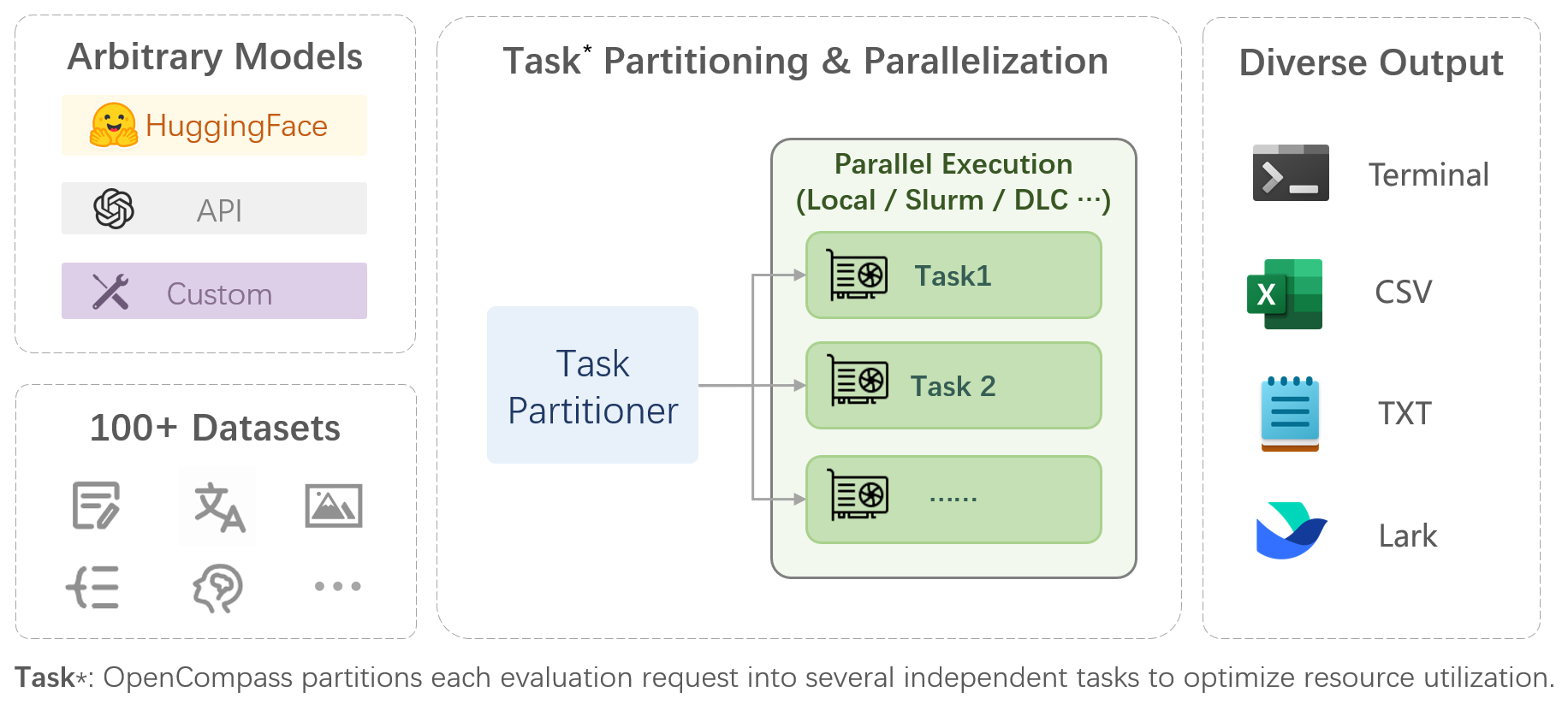

OpenCompass is a one-stop platform for large model evaluation, aiming to provide a fair, open, and reproducible benchmark for large model evaluation. Its main features include:

|

||||

|

||||

- **Comprehensive support for models and datasets**: Pre-support for 20+ HuggingFace and API models, a model evaluation scheme of 70+ datasets with about 400,000 questions, comprehensively evaluating the capabilities of the models in five dimensions.

|

||||

|

||||

- **Efficient distributed evaluation**: One line command to implement task division and distributed evaluation, completing the full evaluation of billion-scale models in just a few hours.

|

||||

|

||||

- **Diversified evaluation paradigms**: Support for zero-shot, few-shot, and chain-of-thought evaluations, combined with standard or dialogue type prompt templates, to easily stimulate the maximum performance of various models.

|

||||

- **Diversified evaluation paradigms**: Support for zero-shot, few-shot, and chain-of-thought evaluations, combined with standard or dialogue-type prompt templates, to easily stimulate the maximum performance of various models.

|

||||

|

||||

- **Modular design with high extensibility**: Want to add new models or datasets, customize an advanced task division strategy, or even support a new cluster management system? Everything about OpenCompass can be easily expanded!

|

||||

|

||||

@@ -64,7 +64,7 @@ OpenCompass is a one-stop platform for large model evaluation, aiming to provide
 
 ## 📊 Leaderboard
 
-We provide [OpenCompass Leaderbaord](https://opencompass.org.cn/rank) for community to rank all public models and API models. If you would like to join the evaluation, please provide the model repository URL or a standard API interface to the email address `opencompass@pjlab.org.cn`.
+We provide [OpenCompass Leaderbaord](https://opencompass.org.cn/rank) for the community to rank all public models and API models. If you would like to join the evaluation, please provide the model repository URL or a standard API interface to the email address `opencompass@pjlab.org.cn`.
 
 <p align="right"><a href="#top">🔝Back to top</a></p>
 

@@ -442,7 +442,7 @@ Through the command line or configuration files, OpenCompass also supports evalu
 - [ ] Long-context evaluation with extensive datasets.
 - [ ] Long-context leaderboard.
 - [ ] Coding
-- [ ] Coding evaluation leaderdboard.
+- [ ] Coding evaluation leaderboard.
 - [ ] Non-python language evaluation service.
 - [ ] Agent
 - [ ] Support various agenet framework.

@@ -452,7 +452,7 @@ Through the command line or configuration files, OpenCompass also supports evalu
 
 ## 👷‍♂️ Contributing
 
-We appreciate all contributions to improve OpenCompass. Please refer to the [contributing guideline](https://opencompass.readthedocs.io/en/latest/notes/contribution_guide.html) for the best practice.
+We appreciate all contributions to improving OpenCompass. Please refer to the [contributing guideline](https://opencompass.readthedocs.io/en/latest/notes/contribution_guide.html) for the best practice.
 
 ## 🤝 Acknowledgements
 
