Mirror of https://github.com/open-compass/opencompass.git (synced 2025-05-30 16:03:24 +08:00)

Merge branch 'open-compass:main' into main

Commit 97c1531ed4

@@ -22,7 +22,7 @@ with read_base():
    from opencompass.configs.datasets.gpqa.gpqa_openai_simple_evals_gen_5aeece import \
        gpqa_datasets # noqa: F401, E501
    # new datasets in Fullbench v1.1
    from opencompass.configs.datasets.gsm8k.gsm8k_0shot_v2_gen_a58960 import \
    from opencompass.configs.datasets.gsm8k.gsm8k_0shot_v2_gen_6e39a4 import \
        gsm8k_datasets # noqa: F401, E501
    from opencompass.configs.datasets.hellaswag.hellaswag_10shot_gen_e42710 import \
        hellaswag_datasets # noqa: F401, E501

@@ -46,7 +46,7 @@ with read_base():
        mmlu_pro_datasets # noqa: F401, E501
    from opencompass.configs.datasets.mmmlu_lite.mmmlu_lite_gen_c51a84 import \
        mmmlu_lite_datasets # noqa: F401, E501
    from opencompass.configs.datasets.musr.musr_gen_3c6e15 import \
    from opencompass.configs.datasets.musr.musr_gen_3622bb import \
        musr_datasets # noqa: F401, E501
    from opencompass.configs.datasets.nq.nq_open_1shot_gen_2e45e5 import \
        nq_datasets # noqa: F401, E501

@@ -70,7 +70,7 @@ internlm2_5-7b-chat-turbomind_fullbench:
drop: 75
hellaswag: 81.25
TheoremQA: 6.25
musr_average: 39.58
musr_average: 37.5
gsm8k: 68.75
math: 75
GPQA_diamond: 25

@@ -1,2 +1,3 @@
recursive-include opencompass/configs *.py *.yml *.json *.txt *.md
recursive-include opencompass/openicl/icl_evaluator/hf_metrics *.py
recursive-include opencompass/datasets *.py *.yml *.json *.txt *.md *.yaml

@@ -15,13 +15,19 @@ datasets = [

models = [
    dict(
        path='Bailing-Lite-0830',
        path='Bailing-Lite-1116',
        token='xxxxxx', # set your key here or in environment variable BAILING_API_KEY
        url='https://bailingchat.alipay.com/chat/completions',
        type=BailingAPI,
        generation_kwargs={},
        query_per_second=1,
        max_seq_len=4096,
        max_out_len=11264,
        batch_size=1,
        generation_kwargs={
            'temperature': 0.01,
            'top_p': 1.0,
            'top_k': -1,
            'n': 1,
            'logprobs': 1,
        },
    ),
]

28
configs/datasets/ruler/ruler_64k_gen.py
Normal file
@@ -0,0 +1,28 @@
from mmengine.config import read_base

with read_base():
    from .ruler_cwe_gen import cwe_datasets as cwe # CWE
    from .ruler_fwe_gen import fwe_datasets as fwe # FWE
    from .ruler_niah_gen import niah_datasets as niah # Niah
    from .ruler_qa_gen import qa_datasets as qa # QA
    from .ruler_vt_gen import vt_datasets as vt # VT


import_ds = sum((cwe, fwe, niah, qa, vt), [])

# Evaluation config
NUM_SAMPLES = 100 # Change to the number of samples you need
# Change the context lengths to be tested
max_seq_lens = [1024 * 64]
abbr_suffixs: list[str] = ['64k']

ruler_datasets = []

# Different seq length
for max_seq_len, abbr_suffix in zip(max_seq_lens, abbr_suffixs):
    for dataset in import_ds:
        tmp_dataset = dataset.deepcopy()
        tmp_dataset['abbr'] = tmp_dataset['abbr'] + '_' + abbr_suffix
        tmp_dataset['num_samples'] = NUM_SAMPLES
        tmp_dataset['max_seq_length'] = max_seq_len
        ruler_datasets.append(tmp_dataset)
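The nested loop in `ruler_64k_gen.py` creates one copy of each imported RULER config for every context length. The sketch below reproduces that pattern with plain dicts. It assumes `copy.deepcopy` behaves like the config object's own `deepcopy()` for this purpose. The helper `expand_by_length` and the base configs are hypothetical stand-ins for the real `ruler_*_gen.py` imports.

```python
import copy


def expand_by_length(base_datasets, max_seq_lens, abbr_suffixes, num_samples):
    """Return one deep copy of every base config per (context length, suffix) pair."""
    expanded = []
    for max_seq_len, abbr_suffix in zip(max_seq_lens, abbr_suffixes):
        for dataset in base_datasets:
            tmp_dataset = copy.deepcopy(dataset)  # leave the shared base config untouched
            tmp_dataset['abbr'] = tmp_dataset['abbr'] + '_' + abbr_suffix
            tmp_dataset['num_samples'] = num_samples
            tmp_dataset['max_seq_length'] = max_seq_len
            expanded.append(tmp_dataset)
    return expanded


# Hypothetical base configs standing in for the cwe/vt imports.
base = [{'abbr': 'ruler_cwe'}, {'abbr': 'ruler_vt'}]
ruler_datasets = expand_by_length(base, [1024 * 64], ['64k'], num_samples=100)
print([d['abbr'] for d in ruler_datasets])  # ['ruler_cwe_64k', 'ruler_vt_64k']
print(base[0])  # {'abbr': 'ruler_cwe'}
```

The deep copy matters once several lengths are listed, for example `[1024 * 32, 1024 * 64]` with `['32k', '64k']`. Without it, every entry would be the same object and the suffixes would pile up as `ruler_cwe_32k_64k`.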

@@ -6,6 +6,7 @@ with read_base():
    from .ruler_8k_gen import ruler_datasets as ruler_8k_ds
    from .ruler_16k_gen import ruler_datasets as ruler_16k_ds
    from .ruler_32k_gen import ruler_datasets as ruler_32k_ds
    from .ruler_64k_gen import ruler_datasets as ruler_64k_ds
    from .ruler_128k_gen import ruler_datasets as ruler_128k_ds

ruler_combined_datasets = sum((v for k, v in locals().items() if k.endswith('_ds')), [])
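`ruler_combined_datasets` concatenates every module-level list whose name ends in `_ds`, in definition order. The sketch below applies the same filter to an explicit dict rather than `locals()`, so the result does not depend on whatever else is in scope. The helper name `collect_by_suffix` and the dataset entries are hypothetical.

```python
def collect_by_suffix(namespace, suffix):
    """Concatenate, in insertion order, every value whose key ends with `suffix`."""
    return sum((v for k, v in namespace.items() if k.endswith(suffix)), [])


namespace = {
    'ruler_32k_ds': [{'abbr': 'ruler_niah_32k'}],
    'ruler_64k_ds': [{'abbr': 'ruler_niah_64k'}],
    'ruler_128k_ds': [{'abbr': 'ruler_niah_128k'}],
    'read_base': None,  # skipped: the name does not end with '_ds'
}
ruler_combined_datasets = collect_by_suffix(namespace, '_ds')
print([d['abbr'] for d in ruler_combined_datasets])
# ['ruler_niah_32k', 'ruler_niah_64k', 'ruler_niah_128k']
```

The combined order follows the import order. Placing the `ruler_64k_gen` import between the 32k and 128k imports therefore keeps the combined list sorted by context length.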

@@ -118,7 +118,7 @@ for _name, _prompt in sub_map.items():
            ]),
        ),
        retriever=dict(type=ZeroRetriever),
        inferencer=dict(type=GenInferencer, max_seq_len=4096, max_out_len=2048),
        inferencer=dict(type=GenInferencer, max_seq_len=4096, max_out_len=4096),
    )

    subjective_eval_cfg = dict(

@@ -47,8 +47,3 @@ for _name in subjective_all_sets:
            infer_cfg=subjective_infer_cfg,
            eval_cfg=subjective_eval_cfg,
        ))
# ds1000_eval_cfg = dict(
# evaluator=dict(type=DS1000Evaluator),
# pred_role='BOT',
# pred_postprocessor=dict(type=ds1000_postprocess),
# )

32
configs/eval_PMMEval.py
Executable file
@@ -0,0 +1,32 @@
from mmengine.config import read_base

from opencompass.models import HuggingFacewithChatTemplate


with read_base():
    from opencompass.configs.models.qwen2_5.lmdeploy_qwen2_5_7b_instruct import models

    # from opencompass.configs.datasets.PMMEval.flores_gen import PMMEval_flores_datasets
    # from opencompass.configs.datasets.PMMEval.humanevalxl_gen import PMMEval_HumanEvalXL_datasets
    # from opencompass.configs.datasets.PMMEval.mgsm_gen import PMMEval_MGSM_datasets
    # from opencompass.configs.datasets.PMMEval.mhellaswag_gen import PMMEval_MHellaswag_datasets
    # from opencompass.configs.datasets.PMMEval.mifeval_gen import PMMEval_MIFEval_datasets
    # from opencompass.configs.datasets.PMMEval.mlogiqa_gen import PMMEval_MLogiQA_datasets
    # from opencompass.configs.datasets.PMMEval.mmmlu_gen import PMMEval_MMMLU_datasets
    # from opencompass.configs.datasets.PMMEval.xnli import PMMEval_XNLI_datasets

    from opencompass.configs.datasets.PMMEval.pmmeval_gen import PMMEval_datasets

    from opencompass.configs.summarizers.PMMEval import summarizer


# datasets = PMMEval_flores_datasets
# datasets = PMMEval_HumanEvalXL_datasets
# datasets = PMMEval_MGSM_datasets
# datasets = PMMEval_MHellaswag_datasets
# datasets = PMMEval_MIFEval_datasets
# datasets = PMMEval_MLogiQA_datasets
# datasets = PMMEval_MMMLU_datasets
# datasets = PMMEval_XNLI_datasets

datasets = PMMEval_datasets

9
configs/eval_korbench.py
Normal file
@@ -0,0 +1,9 @@
from mmengine import read_base

with read_base():
    from opencompass.configs.datasets.korbench.korbench_single_0_shot_gen import korbench_0shot_single_datasets as zero_shot_datasets
    from opencompass.configs.datasets.korbench.korbench_single_3_shot_gen import korbench_3shot_single_datasets as three_shot_datasets
    from opencompass.configs.datasets.korbench.korbench_mixed_gen_d00bdd import korbench_mixed_datasets as mixed_datasets
    from opencompass.configs.models.hf_internlm.hf_internlm2_5_7b import models as hf_internlm2_5_7b
datasets = zero_shot_datasets + three_shot_datasets + mixed_datasets
models = hf_internlm2_5_7b

47
configs/eval_math_llm_judge_internal.py
Normal file
@@ -0,0 +1,47 @@
from mmengine.config import read_base

with read_base():
    from opencompass.configs.datasets.math.math_0shot_llm_judge_v2_gen_31d777 import math_datasets

    # Select a model of interest
    from opencompass.configs.models.qwen2_5.lmdeploy_qwen2_5_72b_instruct import models as qwen2_5_72b_instruct_model

eval_model_name = 'eval_model_name'
postprocessor_model_name = 'postprocessor_model_name'
eval_model_urls = ['http://0.0.0.0:23333/v1']
postprocessor_model_urls = ['http://0.0.0.0:23333/v1']

datasets = sum([v for k, v in locals().items() if k.endswith('_datasets')], [])
models = sum([v for k, v in locals().items() if k.endswith('_model')], [])


for dataset in datasets:
    dataset['eval_cfg']['evaluator']['model_name'] = eval_model_name
    dataset['eval_cfg']['evaluator']['url'] = eval_model_urls
    dataset['eval_cfg']['evaluator']['post_url'] = postprocessor_model_urls
    dataset['eval_cfg']['evaluator']['post_model_name'] = postprocessor_model_name


# -------------Inference Stage ----------------------------------------

from opencompass.runners import LocalRunner
from opencompass.partitioners import NaivePartitioner, NumWorkerPartitioner
from opencompass.tasks import OpenICLInferTask, OpenICLEvalTask

infer = dict(
    partitioner=dict(type=NumWorkerPartitioner, num_worker=8),
    runner=dict(
        type=LocalRunner,
        max_num_workers=8,
        task=dict(type=OpenICLInferTask)
    ),
)

eval = dict(
    partitioner=dict(type=NaivePartitioner, n=10),
    runner=dict(
        type=LocalRunner,
        max_num_workers=256,
        task=dict(type=OpenICLEvalTask)
    ),
)

45
configs/eval_simpleqa.py
Normal file
@@ -0,0 +1,45 @@
# Most of the code in this file is copied from https://github.com/openai/simple-evals/blob/main/math_eval.py
from mmengine.config import read_base
from opencompass.partitioners import NaivePartitioner
from opencompass.runners import LocalRunner
from opencompass.tasks import OpenICLInferTask
from opencompass.summarizers import DefaultSubjectiveSummarizer


with read_base():
    from opencompass.configs.datasets.SimpleQA.simpleqa_gen import simpleqa_datasets
    from opencompass.configs.models.openai.gpt_4o_2024_05_13 import models as gpt_4o_2024_05_13_model

models = gpt_4o_2024_05_13_model # model for generation
judge_models = gpt_4o_2024_05_13_model # model for evaluation

datasets = sum([v for k, v in locals().items() if k.endswith('_datasets')], [])
summarizer = dict(type=DefaultSubjectiveSummarizer)

# -------------Inference Stage ----------------------------------------

from opencompass.runners import LocalRunner
from opencompass.partitioners import NaivePartitioner, NumWorkerPartitioner
from opencompass.tasks import OpenICLInferTask, OpenICLEvalTask
from opencompass.tasks.subjective_eval import SubjectiveEvalTask
from opencompass.partitioners.sub_naive import SubjectiveNaivePartitioner

infer = dict(
    partitioner=dict(type=NumWorkerPartitioner, num_worker=8),
    runner=dict(
        type=LocalRunner,
        max_num_workers=8,
        task=dict(type=OpenICLInferTask)
    ),
)

eval = dict(
    partitioner=dict(
        type=SubjectiveNaivePartitioner,
        models=[gpt_4o_2024_05_13_model],
        judge_models=[gpt_4o_2024_05_13_model],
    ),
    runner=dict(type=LocalRunner,
                max_num_workers=256,
                task=dict(type=SubjectiveEvalTask)),
)

@@ -10,21 +10,19 @@ api_meta_template = dict(

models = [
    dict(
        path='Bailing-Pro-0920',
        path='Bailing-Lite-1116',
        token='', # set your key here or in environment variable BAILING_API_KEY
        url='https://bailingchat.alipay.com/chat/completions',
        type=BailingAPI,
        meta_template=api_meta_template,
        query_per_second=1,
        max_seq_len=4096,
        max_out_len=11264,
        batch_size=1,
        generation_kwargs={
            'temperature': 0.4,
            'temperature': 0.01,
            'top_p': 1.0,
            'top_k': -1,
            'n': 1,
            'logprobs': 1,
            'use_beam_search': False,
        },
    ),
]

@@ -10,21 +10,19 @@ api_meta_template = dict(

models = [
    dict(
        path='Bailing-Pro-0920',
        path='Bailing-Pro-1120',
        token='', # set your key here or in environment variable BAILING_API_KEY
        url='https://bailingchat.alipay.com/chat/completions',
        type=BailingAPI,
        meta_template=api_meta_template,
        query_per_second=1,
        max_seq_len=4096,
        max_out_len=11264,
        batch_size=1,
        generation_kwargs={
            'temperature': 0.4,
            'temperature': 0.01,
            'top_p': 1.0,
            'top_k': -1,
            'n': 1,
            'logprobs': 1,
            'use_beam_search': False,
        },
    ),
]

@@ -13,7 +13,7 @@ default_ruler_tasks = [
    'ruler_qa_squad',
    'ruler_qa_hotpotqa',
]
context_window_sizes = ['4k', '8k', '16k', '32k', '128k', '1m']
context_window_sizes = ['4k', '8k', '16k', '32k', '64k', '128k', '1m']

ruler_summary_groups = []
for context_window_size in context_window_sizes:

@@ -35,7 +35,12 @@ ruler_32k_summarizer = dict(
        [v for k, v in locals().items() if k.endswith('_summary_groups')], []
    ),
)

ruler_64k_summarizer = dict(
    dataset_abbrs=['ruler_64k'],
    summary_groups=sum(
        [v for k, v in locals().items() if k.endswith('_summary_groups')], []
    ),
)
ruler_128k_summarizer = dict(
    dataset_abbrs=['ruler_128k'],
    summary_groups=sum(

@@ -56,6 +61,7 @@ ruler_combined_summarizer = dict(
        'ruler_8k',
        'ruler_16k',
        'ruler_32k',
        'ruler_64k',
        'ruler_128k',
        'ruler_1m',
    ],

@@ -1,8 +1,9 @@
__version__ = '0.3.6'
__version__ = '0.3.7'


def _warn_about_config_migration():
    import warnings

    warnings.warn(
        'Starting from v0.4.0, all AMOTIC configuration files currently '
        'located in `./configs/datasets`, `./configs/models`, and '

@@ -10,7 +11,8 @@ def _warn_about_config_migration():
        '`opencompass/configs/` package. Please update your configuration '
        'file paths accordingly.',
        UserWarning, # Changed to UserWarning
        stacklevel=2)
        stacklevel=2,
    )


# Trigger the warning

@ -0,0 +1,47 @@

|

||||

# ARC Prize Public Evaluation

|

||||

|

||||

#### Overview

|

||||

The spirit of ARC Prize is to open source progress towards AGI. To win prize money, you will be required to publish reproducible code/methods into public domain.

|

||||

|

||||

ARC Prize measures AGI progress using the [ARC-AGI private evaluation set](https://arcprize.org/guide#private), [the leaderboard is here](https://arcprize.org/leaderboard), and the Grand Prize is unlocked once the first team reaches [at least 85%](https://arcprize.org/guide#grand-prize-goal).

|

||||

|

||||

Note: the private evaluation set imposes limitations on solutions (eg. no internet access, so no GPT-4/Claude/etc). There is a [secondary leaderboard](https://arcprize.org/leaderboard) called ARC-AGI-Pub, it measures the [public evaluation set](https://arcprize.org/guide#public-tasks) and imposes no limits but it is not part of ARC Prize 2024 at this time.

|

||||

|

||||

|

||||

#### Tasks

|

||||

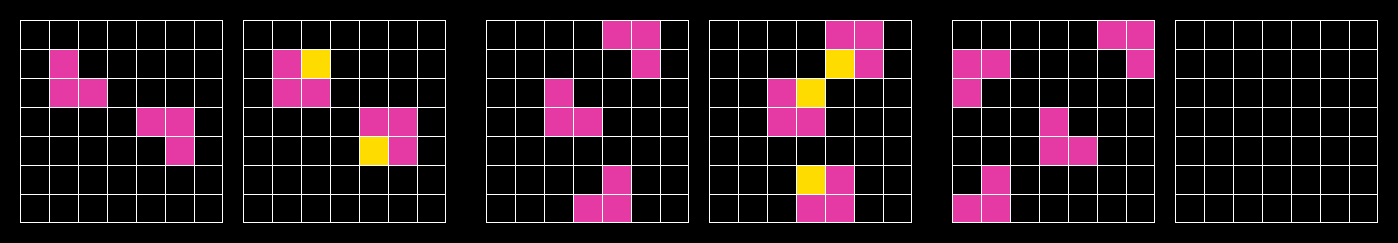

ARC-AGI tasks are a series of three to five input and output tasks followed by a final task with only the input listed. Each task tests the utilization of a specific learned skill based on a minimal number of cognitive priors.

|

||||

|

||||

|

||||

|

||||

Tasks are represented as JSON lists of integers. These JSON objects can also be represented visually as a grid of colors using an ARC-AGI task viewer.

|

||||

|

||||

A successful submission is a pixel-perfect description (color and position) of the final task's output.

|

||||

|

||||

#### Format

|

||||

|

||||

As mentioned above, tasks are stored in JSON format. Each JSON file consists of two key-value pairs.

|

||||

|

||||

`train`: a list of two to ten input/output pairs (typically three.) These are used for your algorithm to infer a rule.

|

||||

|

||||

`test`: a list of one to three input/output pairs (typically one.) Your model should apply the inferred rule from the train set and construct an output solution. You will have access to the output test solution on the public data. The output solution on the private evaluation set will not be revealed.

|

||||

|

||||

Here is an example of a simple ARC-AGI task that has three training pairs along with a single test pair. Each pair is shown as a 2x2 grid. There are four colors represented by the integers 1, 4, 6, and 8. Which actual color (red/green/blue/black) is applied to each integer is arbitrary and up to you.

|

||||

|

||||

```json

|

||||

{

|

||||

"train": [

|

||||

{"input": [[1, 0], [0, 0]], "output": [[1, 1], [1, 1]]},

|

||||

{"input": [[0, 0], [4, 0]], "output": [[4, 4], [4, 4]]},

|

||||

{"input": [[0, 0], [6, 0]], "output": [[6, 6], [6, 6]]}

|

||||

],

|

||||

"test": [

|

||||

{"input": [[0, 0], [0, 8]], "output": [[8, 8], [8, 8]]}

|

||||

]

|

||||

}

|

||||

```
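The snippet below loads the example task above and checks a hand-written rule against every training pair. It then scores the rule's test prediction with an exact, cell-by-cell comparison, which is what "pixel-perfect" means for nested integer lists. The rule in `solve` (fill the grid with its single non-zero color) only fits this toy example. It is an illustrative assumption, not an ARC solver and not the logic of `ARCPrizeEvaluator`.

```python
import json

TASK_JSON = """
{
  "train": [
    {"input": [[1, 0], [0, 0]], "output": [[1, 1], [1, 1]]},
    {"input": [[0, 0], [4, 0]], "output": [[4, 4], [4, 4]]},
    {"input": [[0, 0], [6, 0]], "output": [[6, 6], [6, 6]]}
  ],
  "test": [
    {"input": [[0, 0], [0, 8]], "output": [[8, 8], [8, 8]]}
  ]
}
"""


def solve(grid):
    """Hand-written rule for this example: flood the grid with its one non-zero color."""
    colors = {cell for row in grid for cell in row if cell != 0}
    (color,) = colors  # assumes exactly one non-zero color is present
    return [[color] * len(row) for row in grid]


def is_pixel_perfect(predicted, expected):
    """Exact match: same grid shape and the same integer in every cell."""
    return predicted == expected


task = json.loads(TASK_JSON)
# The inferred rule must reproduce every training output before it is trusted on the test input.
assert all(is_pixel_perfect(solve(p["input"]), p["output"]) for p in task["train"])

test_pair = task["test"][0]
prediction = solve(test_pair["input"])
print(prediction, is_pixel_perfect(prediction, test_pair["output"]))  # [[8, 8], [8, 8]] True
```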

#### Performance

| Qwen2.5-72B-Instruct | LLaMA3.1-70B-Instruct | gemma-2-27b-it |
| ----- | ----- | ----- |
| 0.09 | 0.06 | 0.05 |

@@ -0,0 +1,4 @@
from mmengine.config import read_base

with read_base():
    from .arc_prize_public_evaluation_gen_872059 import arc_prize_public_evaluation_datasets # noqa: F401, F403

@@ -0,0 +1,56 @@
from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets.arc_prize_public_evaluation import ARCPrizeDataset, ARCPrizeEvaluator


# The system_prompt defines the initial instructions for the model,
# setting the context for solving ARC tasks.
system_prompt = '''You are a puzzle solving wizard. You are given a puzzle from the abstraction and reasoning corpus developed by Francois Chollet.'''

# User message template is a template for creating user prompts. It includes placeholders for training data and test input data,
# guiding the model to learn the rule and apply it to solve the given puzzle.
user_message_template = '''Here are the example input and output pairs from which you should learn the underlying rule to later predict the output for the given test input:
----------------------------------------
{training_data}
----------------------------------------
Now, solve the following puzzle based on its input grid by applying the rules you have learned from the training data.:
----------------------------------------
[{{'input': {input_test_data}, 'output': [[]]}}]
----------------------------------------
What is the output grid? Only provide the output grid in the form as in the example input and output pairs. Do not provide any additional information:'''


arc_prize_public_evaluation_reader_cfg = dict(
    input_columns=['training_data', 'input_test_data'],
    output_column='output_test_data'
)

arc_prize_public_evaluation_infer_cfg = dict(
    prompt_template=dict(
        type=PromptTemplate,
        template=dict(
            round=[
                dict(role='SYSTEM', prompt=system_prompt),
                dict(role='HUMAN', prompt=user_message_template),
            ],
        )
    ),
    retriever=dict(type=ZeroRetriever),
    inferencer=dict(type=GenInferencer, max_out_len=2048)
)

arc_prize_public_evaluation_eval_cfg = dict(
    evaluator=dict(type=ARCPrizeEvaluator)
)

arc_prize_public_evaluation_datasets = [
    dict(
        abbr='ARC_Prize_Public_Evaluation',
        type=ARCPrizeDataset,
        path='opencompass/arc_prize_public_evaluation',
        reader_cfg=arc_prize_public_evaluation_reader_cfg,
        infer_cfg=arc_prize_public_evaluation_infer_cfg,
        eval_cfg=arc_prize_public_evaluation_eval_cfg
    )
]

4
opencompass/configs/datasets/PMMEval/flores_gen.py
Executable file
@@ -0,0 +1,4 @@
from mmengine.config import read_base

with read_base():
    from .flores_gen_2697d7 import PMMEval_flores_datasets

65
opencompass/configs/datasets/PMMEval/flores_gen_2697d7.py
Executable file
@@ -0,0 +1,65 @@
from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets.PMMEval import PMMEvalFloresDataset, PMMEvalFloresEvaluator, pmmeval_flores_postprocess

NATURAL_LANGUAGE_FULLNAMES_FLORES = ['Chinese', 'Arabic', 'Spanish', 'French', 'Japanese', 'Korean', 'Portuguese', 'Thai', 'Vietnamese']

PROMPT = {
    "Chinese": "将这个句子从英语翻译成中文。\n\n{src}",
    "Arabic": "ترجم هذه الجملة من الإنجليزية إلى العربية.\n\n{src}",
    "Spanish": "Traduce esta oración del inglés al español.\n\n{src}",
    "Japanese": "この文を英語から日本語に翻訳してください。\n\n{src}",
    "Korean": "이 문장을 영어에서 한국어로 번역하세요.\n\n{src}",
    "Thai": "แปลประโยคนี้จากภาษาอังกฤษเป็นภาษาไทย.\n\n{src}",
    "French": "Traduisez cette phrase de l'anglais en français.\n\n{src}",
    "Portuguese": "Traduza esta frase do inglês para o português.\n\n{src}",
    "Vietnamese": "Dịch câu này từ tiếng Anh sang tiếng Việt.\n\n{src}"
}

PMMEval_flores_datasets = list()

# Add flores_200

PMMEval_flores_reader_cfg = dict(
    input_columns=['src'],
    output_column='tgt',
    test_split='test'
)


PMMEval_flores_datasets = list()

for lang_fullname in NATURAL_LANGUAGE_FULLNAMES_FLORES:
    PMMEval_flores_infer_cfg = dict(
        prompt_template=dict(
            type=PromptTemplate,
            template=dict(
                round=[
                    dict(
                        role='HUMAN',
                        prompt=PROMPT[lang_fullname]
                    )
                ]
            )
        ),
        retriever=dict(type=ZeroRetriever),
        inferencer=dict(type=GenInferencer),
    )

    PMMEval_flores_eval_cfg = dict(
        evaluator=dict(type=PMMEvalFloresEvaluator),
        pred_role='BOT',
        pred_postprocessor=dict(type=pmmeval_flores_postprocess, lang_fullname=lang_fullname)
    )

    PMMEval_flores_datasets.append(
        dict(
            abbr=f'flores-{lang_fullname}',
            type=PMMEvalFloresDataset,
            path='P-MMEval',
            lang_fullname=lang_fullname,
            reader_cfg=PMMEval_flores_reader_cfg,
            infer_cfg=PMMEval_flores_infer_cfg,
            eval_cfg=PMMEval_flores_eval_cfg)
    )

4
opencompass/configs/datasets/PMMEval/humanevalxl_gen.py
Executable file
@@ -0,0 +1,4 @@
from mmengine.config import read_base

with read_base():
    from .humanevalxl_gen_4dfef4 import PMMEval_HumanEvalXL_datasets

49
opencompass/configs/datasets/PMMEval/humanevalxl_gen_bdec92.py
Executable file
@@ -0,0 +1,49 @@
from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets.PMMEval import PMMEvalHumanEvalXLDataset, PMMEvalHumanEvalXLEvaluator

NATURAL_LANGUAGE_FULLNAMES = ['English', 'Chinese', 'Arabic', 'Spanish', 'French', 'Japanese', 'Korean', 'Portuguese', 'Thai', 'Vietnamese']

PMMEval_HumanEvalXL_datasets = list()

PMMEval_HumanEvalXL_reader_cfg = dict(
    input_columns=['task_id', 'prompt', 'entry_point', 'test', 'language', 'description', 'natural_language'],
    output_column='declaration',
    test_split='test'
)

PMMEval_HumanEvalXL_infer_cfg = dict(
    prompt_template=dict(
        type=PromptTemplate,
        template='{prompt}'),
    retriever=dict(type=ZeroRetriever),
    inferencer=dict(type=GenInferencer),
)


PMMEval_HumanEvalXL_datasets = list()

for lang_fullname in NATURAL_LANGUAGE_FULLNAMES:
    for program_lang in ['python', 'java', 'javascript']:

        PMMEval_HumanEvalXL_eval_cfg = dict(
            evaluator=dict(
                type=PMMEvalHumanEvalXLEvaluator,
                language=program_lang,
                text_language=lang_fullname,
                ip_address='localhost',
                port=5001),
            pred_role='BOT')

        PMMEval_HumanEvalXL_datasets.append(
            dict(
                abbr=f'humanevalxl-{program_lang}-{lang_fullname}',
                type=PMMEvalHumanEvalXLDataset,
                path='P-MMEval',
                lang=lang_fullname,
                program_lang=program_lang,
                reader_cfg=PMMEval_HumanEvalXL_reader_cfg,
                infer_cfg=PMMEval_HumanEvalXL_infer_cfg,
                eval_cfg=PMMEval_HumanEvalXL_eval_cfg)
        )
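The nested loop in `humanevalxl_gen_bdec92.py` registers one dataset per (natural language, programming language) pair. The sketch below lists the resulting `abbr` values with `itertools.product`, which iterates in the same order as the two nested loops. Only the language lists are taken from the config; the script itself is illustrative.

```python
from itertools import product

NATURAL_LANGUAGE_FULLNAMES = ['English', 'Chinese', 'Arabic', 'Spanish', 'French',
                              'Japanese', 'Korean', 'Portuguese', 'Thai', 'Vietnamese']
PROGRAM_LANGS = ['python', 'java', 'javascript']

# product() varies the last iterable fastest, matching the inner `for program_lang` loop.
abbrs = [f'humanevalxl-{program_lang}-{lang_fullname}'
         for lang_fullname, program_lang in product(NATURAL_LANGUAGE_FULLNAMES, PROGRAM_LANGS)]

print(len(abbrs))  # 30
print(abbrs[:3])
# ['humanevalxl-python-English', 'humanevalxl-java-English', 'humanevalxl-javascript-English']
```

That gives 30 dataset entries. Each entry's evaluator is configured with `ip_address='localhost'` and `port=5001`, so a service at that address presumably has to be reachable when evaluation runs.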

4
opencompass/configs/datasets/PMMEval/mgsm_gen.py
Executable file
@@ -0,0 +1,4 @@
from mmengine.config import read_base

with read_base():
    from .mgsm_gen_679720 import PMMEval_MGSM_datasets

62
opencompass/configs/datasets/PMMEval/mgsm_gen_679720.py
Executable file
@@ -0,0 +1,62 @@
from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets.PMMEval import PMMEvalMGSMDataset, PMMEvalMGSMEvaluator

NATURAL_LANGUAGE_CODES = ['en', 'zh', 'ar', 'es', 'fr', 'ja', 'ko', 'pt', 'th', 'vi']

LANG_TO_INSTRUCTIONS = {
    "en": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"The answer is \". Do not add anything other than the integer answer after \"The answer is \".\n\n{question}",
    "es": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"La respuesta es \". Do not add anything other than the integer answer after \"La respuesta es \".\n\n{question}",
    "fr": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"La réponse est \". Do not add anything other than the integer answer after \"La réponse est \".\n\n{question}",
    "zh": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"答案是 \". Do not add anything other than the integer answer after \"答案是 \".\n\n{question}",
    "ja": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"答えは \". Do not add anything other than the integer answer after \"答えは \".\n\n{question}",
    "th": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"คำตอบคือ \". Do not add anything other than the integer answer after \"คำตอบคือ \".\n\n{question}",
    "ko": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"답변은 \". Do not add anything other than the integer answer after \"답변은 \".\n\n{question}",
    "pt": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"A resposta é \". Do not add anything other than the integer answer after \"A resposta é \".\n\n{question}",
    "vi": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"Câu trả lời là \". Do not add anything other than the integer answer after \"Câu trả lời là \".\n\n{question}",
    "ar": "Solve this math problem. Give the reasoning steps before giving the final answer on the last line by itself in the format of \"الجواب هو \". Do not add anything other than the integer answer after \"الجواب هو \".\n\n{question}"
}

PMMEval_MGSM_datasets = list()

# Add flores_200

PMMEval_MGSM_reader_cfg = dict(
    input_columns=['question'],
    output_column='answer',
    test_split='test'
)

PMMEval_MGSM_eval_cfg = dict(
    evaluator=dict(type=PMMEvalMGSMEvaluator),
    pred_role='BOT')


for lang_code in NATURAL_LANGUAGE_CODES:
    PMMEval_MGSM_infer_cfg = dict(
        prompt_template=dict(
            type=PromptTemplate,
            template=dict(
                round=[
                    dict(
                        role='HUMAN',
                        prompt=LANG_TO_INSTRUCTIONS[lang_code]
                    )
                ]
            )
        ),
        retriever=dict(type=ZeroRetriever),
        inferencer=dict(type=GenInferencer),
    )

    PMMEval_MGSM_datasets.append(
        dict(
            abbr=f'mgsm-{lang_code}',
            type=PMMEvalMGSMDataset,
            path='P-MMEval',
            lang=lang_code,
            reader_cfg=PMMEval_MGSM_reader_cfg,
            infer_cfg=PMMEval_MGSM_infer_cfg,
            eval_cfg=PMMEval_MGSM_eval_cfg)
    )
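Each MGSM prompt asks the model to end with a fixed, language-specific phrase followed by an integer. How `PMMEvalMGSMEvaluator` actually parses responses is not part of this diff. The sketch below is a hypothetical illustration of pulling out the integer that follows the last occurrence of that phrase. `extract_answer` and `ANSWER_PREFIXES` are invented names; the prefixes are copied from `LANG_TO_INSTRUCTIONS`.

```python
import re

# A subset of the answer phrases used in LANG_TO_INSTRUCTIONS above.
ANSWER_PREFIXES = {
    'en': 'The answer is',
    'fr': 'La réponse est',
    'zh': '答案是',
}


def extract_answer(response, lang_code):
    """Hypothetical helper: integer after the last answer phrase, or None if absent."""
    prefix = ANSWER_PREFIXES[lang_code]
    idx = response.rfind(prefix)
    if idx == -1:
        return None
    # First integer after the phrase (optional minus sign, optional thousands separators).
    match = re.search(r'-?\d[\d,]*', response[idx + len(prefix):])
    if match is None:
        return None
    return int(match.group().replace(',', ''))


print(extract_answer('3 apples + 4 apples = 7 apples.\nThe answer is 7', 'en'))  # 7
print(extract_answer('Il reste 42 billes.\nLa réponse est 42', 'fr'))  # 42
print(extract_answer('I am not sure.', 'en'))  # None
```

Searching from the last occurrence (`rfind`) tolerates reasoning that mentions the phrase earlier. A stricter variant could require the phrase to start the final line, as the prompt requests.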

4
opencompass/configs/datasets/PMMEval/mhellaswag_gen.py
Executable file
@@ -0,0 +1,4 @@
from mmengine.config import read_base

with read_base():
    from .mhellaswag_gen_1a6b73 import PMMEval_MHellaswag_datasets

54

opencompass/configs/datasets/PMMEval/mhellaswag_gen_1a6b73.py

Executable file

54

opencompass/configs/datasets/PMMEval/mhellaswag_gen_1a6b73.py

Executable file

@ -0,0 +1,54 @@

from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets.PMMEval import PMMEvalMHellaswagDataset, PMMEvalMHellaswagEvaluator, pmmeval_mhellaswag_postprocess

NATURAL_LANGUAGE_CODES = ['en', 'zh', 'ar', 'es', 'fr', 'ja', 'ko', 'pt', 'th', 'vi']

PMMEVAL_MHELLASWAG_TEMPLATE = "Input: {ctx}\nOptions: \nA. {option_1}\nB. {option_2}\nC. {option_3}\nD. {option_4}\nPick the correct ending for the sentence from A, B, C, and D, and return it in the following JSON format:\n{\"answer\": \"[choice]\"}\nwhere [choice] must be one of A, B, C or D."

PMMEval_MHellaswag_datasets = list()

PMMEval_MHellaswag_reader_cfg = dict(
    input_columns=['ctx', 'option_1', 'option_2', 'option_3', 'option_4'],
    output_column='label',
    test_split='test'
)

PMMEval_MHellaswag_infer_cfg = dict(
    prompt_template=dict(
        type=PromptTemplate,
        template=dict(
            round=[
                dict(
                    role='HUMAN',
                    prompt=PMMEVAL_MHELLASWAG_TEMPLATE
                )
            ]
        )
    ),
    retriever=dict(type=ZeroRetriever),
    inferencer=dict(type=GenInferencer),
)


PMMEval_MHellaswag_datasets = list()


for lang_code in NATURAL_LANGUAGE_CODES:
    PMMEval_MHellaswag_eval_cfg = dict(
        evaluator=dict(type=PMMEvalMHellaswagEvaluator),
        pred_role='BOT',
        pred_postprocessor=dict(type=pmmeval_mhellaswag_postprocess, lang_code=lang_code)
    )

    PMMEval_MHellaswag_datasets.append(
        dict(
            abbr=f'mhellaswag-{lang_code}',
            type=PMMEvalMHellaswagDataset,
            path='P-MMEval',
            lang=lang_code,
            reader_cfg=PMMEval_MHellaswag_reader_cfg,
            infer_cfg=PMMEval_MHellaswag_infer_cfg,
            eval_cfg=PMMEval_MHellaswag_eval_cfg)
    )
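The MHellaswag template mixes `{column}` placeholders with a literal JSON object, `{"answer": "[choice]"}`. That only works if the prompt is filled by substituting the named input columns rather than by calling `str.format`, which would treat the JSON braces as a replacement field. The sketch below illustrates the distinction under that assumption; `fill_placeholders` and the example row are hypothetical and are not OpenCompass code.

```python
# Hypothetical helper: replace only "{column}" placeholders and leave every other
# brace (such as the literal JSON answer format) untouched.
def fill_placeholders(template: str, **columns: str) -> str:
    for name, value in columns.items():
        template = template.replace('{' + name + '}', str(value))
    return template


# Same text as PMMEVAL_MHELLASWAG_TEMPLATE above, split across lines for readability.
TEMPLATE = (
    "Input: {ctx}\nOptions: \nA. {option_1}\nB. {option_2}\nC. {option_3}\nD. {option_4}\n"
    "Pick the correct ending for the sentence from A, B, C, and D, and return it in the "
    "following JSON format:\n{\"answer\": \"[choice]\"}\nwhere [choice] must be one of A, B, C or D."
)

# Hypothetical input row with the reader_cfg input columns.
row = {
    'ctx': 'She picks up the kettle and',
    'option_1': 'pours hot water into a mug.',
    'option_2': 'throws it into the lake.',
    'option_3': 'starts to sing opera.',
    'option_4': 'paints the ceiling.',
}

prompt = fill_placeholders(TEMPLATE, **row)
print(prompt)

# str.format() parses {"answer": ...} as a field named '"answer"' and fails.
try:
    TEMPLATE.format(**row)
except KeyError as err:
    print('str.format failed on field', err)
```

The same consideration applies to the MMMLU, MLogiQA, and XNLI templates below, which also embed a literal answer object.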

4

opencompass/configs/datasets/PMMEval/mifeval_gen.py

Executable file

@ -0,0 +1,4 @@

from mmengine.config import read_base

with read_base():
    from .mifeval_gen_79f8fb import PMMEval_MIFEval_datasets

51

opencompass/configs/datasets/PMMEval/mifeval_gen_79f8fb.py

Executable file

@ -0,0 +1,51 @@

from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets.PMMEval import PMMEvalMIFEvalDataset, PMMEvalMIFEvalEvaluator, pmmeval_mifeval_postprocess

NATURAL_LANGUAGE_CODES = ['en', 'zh', 'ar', 'es', 'fr', 'ja', 'ko', 'pt', 'th', 'vi']

PMMEVAL_MIFEVAL_TEMPLATE = "{prompt}"

PMMEval_MIFEval_datasets = list()

PMMEval_MIFEval_reader_cfg = dict(
    input_columns=['prompt', 'instruction_id_list', 'kwargs'],
    output_column=None,
    test_split='test'
)


PMMEval_MIFEval_infer_cfg = dict(
    prompt_template=dict(
        type=PromptTemplate,
        template=dict(
            round=[
                dict(
                    role='HUMAN',
                    prompt=PMMEVAL_MIFEVAL_TEMPLATE
                )
            ]
        )
    ),
    retriever=dict(type=ZeroRetriever),
    inferencer=dict(type=GenInferencer),
)

for lang_code in NATURAL_LANGUAGE_CODES:
    PMMEval_MIFEval_eval_cfg = dict(
        evaluator=dict(type=PMMEvalMIFEvalEvaluator),
        pred_role='BOT',
        pred_postprocessor=dict(type=pmmeval_mifeval_postprocess, lang_code=lang_code)
    )

    PMMEval_MIFEval_datasets.append(
        dict(
            abbr=f'mifeval-{lang_code}',
            type=PMMEvalMIFEvalDataset,
            path='P-MMEval',
            lang=lang_code,
            reader_cfg=PMMEval_MIFEval_reader_cfg,
            infer_cfg=PMMEval_MIFEval_infer_cfg,
            eval_cfg=PMMEval_MIFEval_eval_cfg)
    )
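This config has no reference answer (`output_column=None`) and instead passes `instruction_id_list` and `kwargs` through to the evaluator, which suggests each response is checked programmatically against its instructions. Benchmarks in the IFEval family typically report both a prompt-level score (every instruction in a prompt satisfied) and an instruction-level score (fraction of individual instructions satisfied). The sketch below shows that distinction with two hypothetical checkers. The instruction ids, the `response` field, and the checker logic are assumptions for illustration, not the behavior of `PMMEvalMIFEvalEvaluator`.

```python
from typing import Callable, Dict, List


# Hypothetical checkers keyed by instruction id; a real suite defines many more.
def no_comma(response: str, **kwargs) -> bool:
    return ',' not in response


def fewer_words_than(response: str, num_words: int, **kwargs) -> bool:
    return len(response.split()) < num_words


CHECKERS: Dict[str, Callable[..., bool]] = {
    'punctuation:no_comma': no_comma,
    'length_constraints:number_words': fewer_words_than,
}


def score(samples: List[dict]) -> Dict[str, float]:
    """Compute prompt-level and instruction-level accuracy."""
    prompts_followed = 0
    instructions_followed = 0
    instructions_total = 0
    for sample in samples:
        # One boolean per (instruction id, kwargs) pair attached to this prompt.
        results = [
            CHECKERS[inst_id](sample['response'], **inst_kwargs)
            for inst_id, inst_kwargs in zip(sample['instruction_id_list'], sample['kwargs'])
        ]
        prompts_followed += all(results)
        instructions_followed += sum(results)
        instructions_total += len(results)
    return {
        'prompt_level_acc': prompts_followed / len(samples),
        'inst_level_acc': instructions_followed / instructions_total,
    }


samples = [
    {'response': 'A short reply without commas',
     'instruction_id_list': ['punctuation:no_comma', 'length_constraints:number_words'],
     'kwargs': [{}, {'num_words': 10}]},
    {'response': 'Yes, but only briefly',
     'instruction_id_list': ['punctuation:no_comma'],
     'kwargs': [{}]},
]
print(score(samples))
```

In this toy input the first prompt satisfies both of its instructions and the second fails its only one, so the prompt-level score (1 of 2) is lower than the instruction-level score (2 of 3).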

4

opencompass/configs/datasets/PMMEval/mlogiqa_gen.py

Executable file

@ -0,0 +1,4 @@

from mmengine.config import read_base

with read_base():
    from .mlogiqa_gen_36c4f9 import PMMEval_MLogiQA_datasets

50

opencompass/configs/datasets/PMMEval/mlogiqa_gen_36c4f9.py

Executable file

@ -0,0 +1,50 @@

from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets.PMMEval import PMMEvalMLogiQADataset, PMMEvalMLogiQAEvaluator, pmmeval_mlogiqa_postprocess

NATURAL_LANGUAGE_CODES = ['en', 'zh', 'ar', 'es', 'fr', 'ja', 'ko', 'pt', 'th', 'vi']

PMMEVAL_MLOGIQA_TEMPLATE = "Passage: {context}\nQuestion: {question}\nChoices:\nA.{option_1}\nB.{option_2}\nC.{option_3}\nD.{option_4}\nPlease choose the most suitable one among A, B, C and D as the answer to this question, and return it in the following JSON format:\n{'answer': '[choice]'}\nwhere [choice] must be one of A, B, C and D."

PMMEval_MLogiQA_datasets = []


PMMEval_MLogiQA_reader_cfg = dict(
    input_columns=['context', 'question', 'option_1', 'option_2', 'option_3', 'option_4'],
    output_column='answer',
    train_split='test')

PMMEval_MLogiQA_infer_cfg = dict(
    prompt_template=dict(
        type=PromptTemplate,
        template=dict(
            round=[
                dict(
                    role='HUMAN',
                    prompt=PMMEVAL_MLOGIQA_TEMPLATE
                )
            ]
        )
    ),
    retriever=dict(type=ZeroRetriever),
    inferencer=dict(type=GenInferencer),
)


for lang_code in NATURAL_LANGUAGE_CODES:
    PMMEval_MLogiQA_eval_cfg = dict(
        evaluator=dict(type=PMMEvalMLogiQAEvaluator),
        pred_role='BOT',
        pred_postprocessor=dict(type=pmmeval_mlogiqa_postprocess, lang_code=lang_code))

    PMMEval_MLogiQA_datasets.append(
        dict(
            abbr=f'mlogiqa-{lang_code}',
            type=PMMEvalMLogiQADataset,
            path='P-MMEval',
            lang=lang_code,
            reader_cfg=PMMEval_MLogiQA_reader_cfg,
            infer_cfg=PMMEval_MLogiQA_infer_cfg,
            eval_cfg=PMMEval_MLogiQA_eval_cfg)
    )
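The MLogiQA prompt asks for `{'answer': '[choice]'}` with single quotes, which is Python-literal syntax rather than strict JSON, while the MHellaswag, MMMLU, and XNLI prompts ask for the double-quoted form. A tolerant parser therefore has to accept both. The hypothetical `parse_choice` below tries `json.loads` first and falls back to `ast.literal_eval`; it is an illustration under that assumption, not the logic of `pmmeval_mlogiqa_postprocess`.

```python
import ast
import json
import re
from typing import Optional

# Flat {...} objects (no nested braces) anywhere in the completion.
_OBJECT = re.compile(r'\{[^{}]*\}')


def parse_choice(completion: str, valid: str = 'ABCD') -> Optional[str]:
    """Return the option letter from an {"answer": ...} or {'answer': ...} object."""
    for match in _OBJECT.finditer(completion):
        blob = match.group(0)
        # Strict JSON first, then Python-literal syntax for single-quoted keys.
        for loader in (json.loads, ast.literal_eval):
            try:
                obj = loader(blob)
            except (ValueError, SyntaxError, TypeError):
                continue
            if isinstance(obj, dict):
                choice = str(obj.get('answer', '')).strip().upper()
                if len(choice) == 1 and choice in valid:
                    return choice
            break  # parsed but unusable; move on to the next {...} candidate
    return None


print(parse_choice("Reasoning about the passage...\n{'answer': 'B'}"))  # B
```

Trying strict JSON first keeps well-formed output on the standard path; the `ast.literal_eval` fallback only evaluates literals, so it is safe to run on model output.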

4

opencompass/configs/datasets/PMMEval/mmmlu_gen.py

Executable file

@ -0,0 +1,4 @@

from mmengine.config import read_base

with read_base():
    from .mmmlu_gen_d5017d import PMMEval_MMMLU_datasets

52

opencompass/configs/datasets/PMMEval/mmmlu_gen_d5017d.py

Executable file

@ -0,0 +1,52 @@

from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets.PMMEval import PMMEvalMMMLUDataset, PMMEvalMMMLUEvaluator, pmmeval_mmmlu_postprocess

NATURAL_LANGUAGE_CODES_MMMLU = ['EN-US', 'ZH-CN', 'AR-XY', 'ES-LA', 'FR-FR', 'JA-JP', 'KO-KR', 'PT-BR', 'TH-TL', 'VI-VT']

PMMEVAL_MMMLU_TEMPLATE = "The following is a multiple-choice question. Please choose the most suitable one among A, B, C and D as the answer to this question, and return it in the following JSON format:\n{\"answer\": \"[choice]\"}\nwhere [choice] must be one of A, B, C and D.\n\n{Question}\nA. {A}\nB. {B}\nC. {C}\nD. {D}"

PMMEval_MMMLU_datasets = []


PMMEval_MMMLU_reader_cfg = dict(
    input_columns=['Question', 'A', 'B', 'C', 'D'],
    output_column='Answer',
    train_split='test')


PMMEval_MMMLU_infer_cfg = dict(
    prompt_template=dict(
        type=PromptTemplate,
        template=dict(
            round=[
                dict(
                    role='HUMAN',
                    prompt=PMMEVAL_MMMLU_TEMPLATE
                )
            ]
        )
    ),
    retriever=dict(type=ZeroRetriever),
    inferencer=dict(type=GenInferencer),
)


for lang_code in NATURAL_LANGUAGE_CODES_MMMLU:
    PMMEval_MMMLU_eval_cfg = dict(
        evaluator=dict(type=PMMEvalMMMLUEvaluator),
        pred_role='BOT',
        pred_postprocessor=dict(type=pmmeval_mmmlu_postprocess, lang_code=lang_code))

    PMMEval_MMMLU_datasets.append(
        dict(
            abbr=f'mmmlu-{lang_code}',
            type=PMMEvalMMMLUDataset,
            path='P-MMEval',
            lang=lang_code,
            difficulty='all',
            reader_cfg=PMMEval_MMMLU_reader_cfg,
            infer_cfg=PMMEval_MMMLU_infer_cfg,
            eval_cfg=PMMEval_MMMLU_eval_cfg)
    )
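Inside the loop, `PMMEval_MMMLU_eval_cfg` is rebuilt on every iteration, so each per-language dataset keeps its own `lang_code` for the postprocessor, while the language-independent reader and inference configs are shared. The plain-Python sketch below (no OpenCompass imports; `build_fresh` and `build_shared` are hypothetical helpers) shows why that matters at the Python level: mutating one shared dict instead would leave every dataset referencing the last language.

```python
from typing import Dict, List

LANG_CODES = ['EN-US', 'ZH-CN', 'AR-XY']  # a subset of NATURAL_LANGUAGE_CODES_MMMLU


def build_fresh(langs: List[str]) -> List[Dict]:
    """Create a new eval config on every iteration (the pattern used above)."""
    datasets = []
    for lang_code in langs:
        eval_cfg = dict(pred_postprocessor=dict(lang_code=lang_code))
        datasets.append(dict(abbr=f'mmmlu-{lang_code}', eval_cfg=eval_cfg))
    return datasets


def build_shared(langs: List[str]) -> List[Dict]:
    """Anti-pattern: mutate one eval config that every dataset references."""
    eval_cfg = dict(pred_postprocessor=dict(lang_code=None))
    datasets = []
    for lang_code in langs:
        eval_cfg['pred_postprocessor']['lang_code'] = lang_code
        datasets.append(dict(abbr=f'mmmlu-{lang_code}', eval_cfg=eval_cfg))
    return datasets


fresh = build_fresh(LANG_CODES)
shared = build_shared(LANG_CODES)
print([d['eval_cfg']['pred_postprocessor']['lang_code'] for d in fresh])   # ['EN-US', 'ZH-CN', 'AR-XY']
print([d['eval_cfg']['pred_postprocessor']['lang_code'] for d in shared])  # ['AR-XY', 'AR-XY', 'AR-XY']
```

Sharing `reader_cfg` and `infer_cfg` across iterations is safe precisely because nothing in them depends on `lang_code`.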

14

opencompass/configs/datasets/PMMEval/pmmeval_gen.py

Executable file

@ -0,0 +1,14 @@

from mmengine.config import read_base

with read_base():
    from .flores_gen_2697d7 import PMMEval_flores_datasets
    from .humanevalxl_gen_bdec92 import PMMEval_HumanEvalXL_datasets
    from .mgsm_gen_679720 import PMMEval_MGSM_datasets
    from .mhellaswag_gen_1a6b73 import PMMEval_MHellaswag_datasets
    from .mifeval_gen_79f8fb import PMMEval_MIFEval_datasets
    from .mlogiqa_gen_36c4f9 import PMMEval_MLogiQA_datasets
    from .mmmlu_gen_d5017d import PMMEval_MMMLU_datasets
    from .xnli_gen_973734 import PMMEval_XNLI_datasets


PMMEval_datasets = sum((v for k, v in locals().items() if k.endswith('_datasets')), [])
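`pmmeval_gen.py` merges the eight imported lists by scanning `locals()` (which is the module namespace at top level) for names ending in `_datasets` and concatenating the matches with `sum(..., [])`. The sketch below reproduces the idiom on an explicit dictionary so it runs without OpenCompass; `collect_datasets`, `collect_datasets_linear`, and the toy entries are hypothetical.

```python
from itertools import chain
from typing import Dict, List


def collect_datasets(namespace: Dict[str, object]) -> List[dict]:
    """Concatenate every list bound to a name ending in '_datasets' (insertion order)."""
    return sum((v for k, v in namespace.items() if k.endswith('_datasets')), [])


def collect_datasets_linear(namespace: Dict[str, object]) -> List[dict]:
    """Same result; chain.from_iterable avoids the repeated list copies that sum() makes."""
    return list(chain.from_iterable(v for k, v in namespace.items() if k.endswith('_datasets')))


namespace = {
    'PMMEval_MGSM_datasets': [{'abbr': 'mgsm-en'}, {'abbr': 'mgsm-zh'}],
    'PMMEval_XNLI_datasets': [{'abbr': 'xnli-en'}],
    'reader_cfg': {'input_columns': ['question']},  # skipped: name lacks the suffix
}
print([d['abbr'] for d in collect_datasets(namespace)])  # ['mgsm-en', 'mgsm-zh', 'xnli-en']
```

Two caveats of the idiom: any other module-level name ending in `_datasets` is silently included, and `sum` over lists is quadratic in the number of lists, which is negligible for eight lists but is the reason `chain.from_iterable` is often preferred.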

4

opencompass/configs/datasets/PMMEval/xnli_gen.py

Executable file

@ -0,0 +1,4 @@

from mmengine.config import read_base

with read_base():
    from .xnli_gen_973734 import PMMEval_XNLI_datasets

60

opencompass/configs/datasets/PMMEval/xnli_gen_973734.py

Executable file

@ -0,0 +1,60 @@

from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets.PMMEval import PMMEvalXNLIDataset, PMMEvalXNLIEvaluator, pmmeval_xnli_postprocess

NATURAL_LANGUAGE_CODES = ['en', 'zh', 'ar', 'es', 'fr', 'ja', 'ko', 'pt', 'th', 'vi']

PMMEVAL_XNLI_TEMPLATE = """Take the following as truth: {premise}
Then the following statement: \"{statement}\" is
Options:
A. true
B. inconclusive
C. false
Select the correct option from A, B, and C, and return it in the following JSON format:
{"answer": "[choice]"}
where [choice] must be one of A, B, and C."""

PMMEval_XNLI_datasets = list()

# Per-language XNLI dataset configs

PMMEval_XNLI_reader_cfg = dict(
    input_columns=['premise', 'statement'],
    output_column='answer',
    test_split='test'
)


PMMEval_XNLI_infer_cfg = dict(
    prompt_template=dict(
        type=PromptTemplate,
        template=dict(
            round=[
                dict(
                    role='HUMAN',
                    prompt=PMMEVAL_XNLI_TEMPLATE
                )
            ]
        )
    ),
    retriever=dict(type=ZeroRetriever),
    inferencer=dict(type=GenInferencer),
)

for lang_code in NATURAL_LANGUAGE_CODES:
    PMMEval_XNLI_eval_cfg = dict(
        evaluator=dict(type=PMMEvalXNLIEvaluator),
        pred_role='BOT',
        pred_postprocessor=dict(type=pmmeval_xnli_postprocess, lang_code=lang_code))

    PMMEval_XNLI_datasets.append(
        dict(
            abbr=f'xnli-{lang_code}',
            type=PMMEvalXNLIDataset,
            path='P-MMEval',
            lang=lang_code,
            reader_cfg=PMMEval_XNLI_reader_cfg,
            infer_cfg=PMMEval_XNLI_infer_cfg,
            eval_cfg=PMMEval_XNLI_eval_cfg)
    )
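Each loop in these configs emits one dataset per language, with abbrs such as `xnli-en` and `xnli-zh`, so a single benchmark-level number has to be derived from the per-language scores. One common choice, assumed here rather than taken from any OpenCompass summarizer, is the unweighted (macro) average, which weights every language equally regardless of how many test items it has. `macro_average` is a hypothetical helper and the scores are toy numbers, not results.

```python
from statistics import mean
from typing import Dict


def macro_average(scores: Dict[str, float], prefix: str) -> float:
    """Unweighted mean over the per-language entries whose abbr starts with prefix."""
    selected = [score for abbr, score in scores.items() if abbr.startswith(prefix)]
    if not selected:
        raise ValueError(f'no scores found for prefix {prefix!r}')
    return mean(selected)


# Toy numbers for illustration only.
scores = {'xnli-en': 80.0, 'xnli-zh': 70.0, 'xnli-ar': 60.0, 'mgsm-en': 50.0}
print(macro_average(scores, 'xnli-'))  # 70.0
```

Matching on the `abbr` prefix relies on the `f'xnli-{lang_code}'` naming used in the loop above; a micro average would instead need per-language item counts.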

10

opencompass/configs/datasets/SimpleQA/README.md

Normal file

@ -0,0 +1,10 @@

# OpenCompass SimpleQA dataset config for evaluation

## 1. Introduction

SimpleQA is a benchmark from OpenAI that evaluates the ability of language models to answer short, fact-seeking questions.
The original implementation is available at https://github.com/openai/simple-evals.

## 2. How to use

Please refer to the demo evaluation script `/opencompass/configs/mine/simpleqa_eval.py`.

4

opencompass/configs/datasets/SimpleQA/simpleqa_gen.py

Normal file

@ -0,0 +1,4 @@

from mmengine.config import read_base

with read_base():
    from .simpleqa_gen_0283c3 import simpleqa_datasets  # noqa: F401, F403

133

opencompass/configs/datasets/SimpleQA/simpleqa_gen_0283c3.py

Normal file

@ -0,0 +1,133 @@

from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.openicl.icl_evaluator import LMEvaluator
from opencompass.datasets import SimpleQADataset, simpleqa_postprocess

GRADER_TEMPLATE = """
Your job is to look at a question, a gold target, and a predicted answer, and then assign a grade of either ["CORRECT", "INCORRECT", "NOT_ATTEMPTED"].
First, I will give examples of each grade, and then you will grade a new example.


The following are examples of CORRECT predicted answers.
```
Question: What are the names of Barack Obama's children?
Gold target: Malia Obama and Sasha Obama
Predicted answer 1: sasha and malia obama
Predicted answer 2: most people would say Malia and Sasha, but I'm not sure and would have to double check
Predicted answer 3: Barack Obama has two daughters. Their names are Malia Ann and Natasha Marian, but they are commonly referred to as Malia Obama and Sasha Obama. Malia was born on July 4, 1998, and Sasha was born on June 10, 2001.
```
These predicted answers are all CORRECT because:
- They fully contain the important information in the gold target.
- They do not contain any information that contradicts the gold target.
- Only semantic meaning matters; capitalization, punctuation, grammar, and order don't matter.
- Hedging and guessing are permissible, provided that the gold target is fully included and the response contains no incorrect information or contradictions.


The following are examples of INCORRECT predicted answers.
```
Question: What are the names of Barack Obama's children?
Gold target: Malia and Sasha
Predicted answer 1: Malia.
Predicted answer 2: Malia, Sasha, and Susan.
Predicted answer 3: Barack Obama does not have any children.
Predicted answer 4: I think it's either Malia and Sasha. Or it could be Malia and Jackie. Or it could be Joey and Malia.
Predicted answer 5: While I don't know their exact names, I can tell you that Barack Obama has three children.
Predicted answer 6: It's possible you may mean Betsy and Olivia. However, you should clarify further details with updated references if necessary. Is that the correct answer?
Predicted answer 7: It may be the case that Obama's child is named James. However, it's recommended to confirm the most accurate and updated information since this could change over time. This model may not always reflect the most current information.
```
These predicted answers are all INCORRECT because:
- A factual statement in the answer contradicts the gold target. Incorrect statements that have some hedging (e.g., "it is possible that", "although i'm not sure, i think") are also considered incorrect.


The following are examples of NOT_ATTEMPTED predicted answers.
```
Question: What are the names of Barack Obama's children?
Gold target: Malia and Sasha
Predicted answer 1: I don't know.
Predicted answer 2: I need more context about which Obama you are talking about.
Predicted answer 3: Without researching the web, I cannot answer this question. However, I can tell you that Barack Obama has two children.
Predicted answer 4: Barack Obama has two children. I know that one of them is Malia, but I'm not sure about the other one.
```
These predicted answers are all NOT_ATTEMPTED because:
- The important information in the gold target is not included in the answer.
- No statements in the answer contradict the gold target.


Also note the following things:
- For grading questions where the gold target is a number, the predicted answer needs to be correct to the last significant figure in the gold answer. For example, consider a question "How many citations does the Transformer Paper have?" with gold target "120k".
- Predicted answers "120k", "124k", and "115k" are all CORRECT.
- Predicted answers "100k" and "113k" are INCORRECT.
- Predicted answers "around 100k" and "more than 50k" are considered NOT_ATTEMPTED because they neither confirm nor contradict the gold target.
- The gold target may contain more information than the question. In such cases, the predicted answer only needs to contain the information that is in the question.
- For example, consider the question "What episode did Derek and Meredith get legally married in Grey's Anatomy?" with gold target "Season 7, Episode 20: White Wedding". Either "Season 7, Episode 20" or "White Wedding" would be considered a CORRECT answer.
- Do not punish predicted answers if they omit information that would be clearly inferred from the question.
- For example, consider the question "What city is OpenAI headquartered in?" and the gold target "San Francisco, California". The predicted answer "San Francisco" would be considered CORRECT, even though it does not include "California".
- Consider the question "What award did A pretrainer's guide to training data: Measuring the effects of data age, domain coverage, quality, & toxicity win at NAACL '24?", the gold target is "Outstanding Paper Award". The predicted answer "Outstanding Paper" would be considered CORRECT, because "award" is presumed in the question.
- For the question "What is the height of Jason Wei in meters?", the gold target is "1.73 m". The predicted answer "1.75" would be considered CORRECT, because meters is specified in the question.
- For the question "What is the name of Barack Obama's wife?", the gold target is "Michelle Obama". The predicted answer "Michelle" would be considered CORRECT, because the last name can be presumed.
- Do not punish for typos in people's name if it's clearly the same name.
- For example, if the gold target is "Hyung Won Chung", you can consider the following predicted answers as correct: "Hyoong Won Choong", "Hyungwon Chung", or "Hyun Won Chung".

Grade the predicted answer of this new question as one of:
A: CORRECT
B: INCORRECT
C: NOT_ATTEMPTED
Just return the letters "A", "B", or "C", with no text around it.

Here is a new example. Simply reply with either CORRECT, INCORRECT, NOT ATTEMPTED. Don't apologize or correct yourself if there was a mistake; we are just trying to grade the answer.
```
Question: {problem}
Gold target: {answer}
Predicted answer: {prediction}
```
""".strip()

simpleqa_reader_cfg = dict(input_columns=['problem'], output_column='answer')

simpleqa_infer_cfg = dict(
    prompt_template=dict(
        type=PromptTemplate,
        template=dict(
            round=[
                dict(role='HUMAN', prompt="Question: {problem}\nLet's think step by step:"),
            ],
        )),
    retriever=dict(type=ZeroRetriever),
    inferencer=dict(type=GenInferencer, max_out_len=2048))

simpleqa_eval_cfg = dict(
    evaluator=dict(
        type=LMEvaluator,
        prompt_template=dict(
            type=PromptTemplate,
            template=dict(
                begin=[
                    dict(
                        role='SYSTEM',
                        fallback_role='HUMAN',
                        prompt="You are a helpful assistant who evaluates the correctness and quality of models' outputs.")
                ],
                round=[
                    dict(
                        role='HUMAN',
                        prompt=GRADER_TEMPLATE
                    ),
                ]),
        ),
        dict_postprocessor=dict(type=simpleqa_postprocess),
    ),
    pred_role='BOT',
)

simpleqa_datasets = [
    dict(
        abbr='simpleqa',
        type=SimpleQADataset,
        path='opencompass/simpleqa',
        reader_cfg=simpleqa_reader_cfg,
        infer_cfg=simpleqa_infer_cfg,
        eval_cfg=simpleqa_eval_cfg,
        mode='singlescore',
    )
]
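The grader prompt asks the judge model for one of three letters, and `simpleqa_postprocess` then turns the judge outputs into scores; how it does so is not shown in this diff. The sketch below is one plausible aggregation, modeled on the metrics OpenAI's simple-evals reports (an assumption worth checking against that repository): the shares of correct, incorrect, and not-attempted answers, accuracy over attempted questions, and an F1-style harmonic mean of overall accuracy and accuracy-given-attempted. `normalize_grade` and `aggregate_grades` are hypothetical. `normalize_grade` also accepts spelled-out grades, because the template's last paragraph asks for CORRECT/INCORRECT/NOT ATTEMPTED even though an earlier line asks for a bare letter.

```python
from collections import Counter
from typing import Dict, Iterable

# Letter codes requested by GRADER_TEMPLATE.
LETTER_TO_GRADE = {'A': 'CORRECT', 'B': 'INCORRECT', 'C': 'NOT_ATTEMPTED'}


def normalize_grade(judge_output: str) -> str:
    """Map a judge reply to a grade; unparseable replies count as NOT_ATTEMPTED (an assumption)."""
    text = judge_output.strip().upper()
    # Check spelled-out grades before letters, because "CORRECT" starts with "C".
    for word in ('NOT_ATTEMPTED', 'NOT ATTEMPTED', 'INCORRECT', 'CORRECT'):
        if text.startswith(word):
            return word.replace(' ', '_')
    return LETTER_TO_GRADE.get(text[:1], 'NOT_ATTEMPTED')


def aggregate_grades(judge_outputs: Iterable[str]) -> Dict[str, float]:
    counts = Counter(normalize_grade(out) for out in judge_outputs)
    total = sum(counts.values())
    if total == 0:
        raise ValueError('no judge outputs to aggregate')
    correct = counts['CORRECT'] / total
    incorrect = counts['INCORRECT'] / total
    attempted = correct + incorrect
    accuracy_given_attempted = correct / attempted if attempted > 0 else 0.0
    # Harmonic mean of overall accuracy and accuracy on attempted questions.
    denom = correct + accuracy_given_attempted
    f1 = 2 * correct * accuracy_given_attempted / denom if denom > 0 else 0.0
    return {
        'is_correct': correct,
        'is_incorrect': incorrect,
        'is_not_attempted': counts['NOT_ATTEMPTED'] / total,
        'accuracy_given_attempted': accuracy_given_attempted,
        'f1': f1,
    }


print(aggregate_grades(['A', 'A', 'B', 'C']))
```

For the toy input, half the answers are correct and two of the three attempted answers are correct, so the F1-style score is 2 · 0.5 · (2/3) / (0.5 + 2/3) = 4/7. Declining to answer lowers `is_correct` but raises `accuracy_given_attempted`, which is the trade-off this combined score is meant to balance.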

@ -0,0 +1,39 @@

from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.datasets import Aime2024Dataset, MATHEvaluator, math_postprocess_v2


aime2024_reader_cfg = dict(
    input_columns=['question'],
    output_column='answer'
)


aime2024_infer_cfg = dict(
    prompt_template=dict(
        type=PromptTemplate,
        template=dict(
            round=[
                dict(role='HUMAN', prompt='{question}\nRemember to put your final answer within \\boxed{}.'),
            ],
        )
    ),
    retriever=dict(type=ZeroRetriever),
    inferencer=dict(type=GenInferencer, max_out_len=2048)
)

aime2024_eval_cfg = dict(
    evaluator=dict(type=MATHEvaluator, version='v2'), pred_postprocessor=dict(type=math_postprocess_v2)
)

aime2024_datasets = [
    dict(
        abbr='aime2024',
        type=Aime2024Dataset,
        path='opencompass/aime2024',
        reader_cfg=aime2024_reader_cfg,
        infer_cfg=aime2024_infer_cfg,
        eval_cfg=aime2024_eval_cfg
    )
]
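The AIME prompt asks for the final answer inside `\boxed{}`, and grading relies on `math_postprocess_v2` and `MATHEvaluator`, whose internals are not shown here. As an illustration of the extraction step such a postprocessor has to perform, the hypothetical `last_boxed_content` below returns the contents of the last `\boxed{...}` while tracking nested braces, so an answer like `\boxed{\frac{1}{2}}` is handled. It is a sketch, not the OpenCompass implementation.

```python
from typing import Optional

BOXED = '\\boxed{'


def last_boxed_content(text: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...}, honoring nested braces."""
    start = text.rfind(BOXED)
    if start == -1:
        return None
    begin = start + len(BOXED)
    depth = 1  # the opening brace of \boxed{ is already consumed
    for i in range(begin, len(text)):
        if text[i] == '{':
            depth += 1
        elif text[i] == '}':
            depth -= 1
            if depth == 0:
                return text[begin:i]
    return None  # unbalanced braces


print(last_boxed_content(r'First \boxed{\frac{1}{2}}, then finally \boxed{204}.'))  # 204
```

Taking the last box rather than the first matches the usual convention that a model's final answer comes at the end. Because AIME answers are integers from 0 to 999, a caller could additionally convert the extracted string with `int()`.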

@ -0,0 +1,96 @@

import os
from opencompass.openicl.icl_prompt_template import PromptTemplate
from opencompass.openicl.icl_retriever import ZeroRetriever
from opencompass.openicl.icl_inferencer import GenInferencer
from opencompass.openicl.icl_evaluator import AccEvaluator
from opencompass.datasets import BBHDataset, BBHEvaluator, bbh_mcq_postprocess, BBHEvaluator_mcq

bbh_reader_cfg = dict(input_columns=['input'], output_column='target')

bbh_multiple_choice_sets = [
    'temporal_sequences',
    'disambiguation_qa',
    'date_understanding',
    'tracking_shuffled_objects_three_objects',
    'penguins_in_a_table',
    'geometric_shapes',
    'snarks',
    'ruin_names',
    'tracking_shuffled_objects_seven_objects',
    'tracking_shuffled_objects_five_objects',
    'logical_deduction_three_objects',
    'hyperbaton',
    'logical_deduction_five_objects',
    'logical_deduction_seven_objects',
    'movie_recommendation',
    'salient_translation_error_detection',
    'reasoning_about_colored_objects',
]
bbh_free_form_sets = [
    'multistep_arithmetic_two',
    'navigate',
    'dyck_languages',
    'word_sorting',
    'sports_understanding',
    'boolean_expressions',
    'object_counting',
    'formal_fallacies',
    'causal_judgement',
    'web_of_lies',
]

bbh_datasets = []
for _name in bbh_multiple_choice_sets:
    bbh_infer_cfg = dict(
        prompt_template=dict(
            type=PromptTemplate,
            template=dict(round=[
                dict(
                    role='HUMAN',
                    prompt=
                    f"Follow the given examples and answer the question.\n\nQuestion: {{input}}\n You must give your final answer by starting with 'So the answer is' "
                )
            ])),
        retriever=dict(type=ZeroRetriever),
        inferencer=dict(type=GenInferencer, max_out_len=512))
    bbh_eval_cfg = dict(
        evaluator=dict(type=BBHEvaluator_mcq),
        pred_role='BOT',
        pred_postprocessor=dict(type=bbh_mcq_postprocess),
        dataset_postprocessor=dict(type=bbh_mcq_postprocess))

    bbh_datasets.append(
        dict(
            type=BBHDataset,
            path='opencompass/bbh',
            name=_name,
            abbr='bbh-' + _name,
            reader_cfg=bbh_reader_cfg,
            infer_cfg=bbh_infer_cfg.copy(),
            eval_cfg=bbh_eval_cfg.copy()))

for _name in bbh_free_form_sets:

    bbh_infer_cfg = dict(