Mirror of https://github.com/open-compass/opencompass.git (synced 2025-05-30 16:03:24 +08:00)

[Feat] Update URL (#368)

Parent: a05daab911
Commit: 3871188c89
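Every change in this commit is a mechanical rewrite of the legacy `InternLM/opencompass` GitHub path (also spelled `InternLM/OpenCompass`) to `open-compass/opencompass`. The commit does not record how the edits were made. The following is a hypothetical Python sketch of such a bulk rewrite. The names `migrate_urls` and `migrate_tree` and the set of file suffixes are illustrative assumptions, not part of OpenCompass.

```python
import re
from pathlib import Path

# Hypothetical pattern for the legacy repository path. IGNORECASE covers both
# spellings seen in this diff ("InternLM/opencompass" and "InternLM/OpenCompass").
# The negative lookahead stops the match from running into a longer repository
# name such as "opencompass-extra", while still allowing "opencompass.git".
LEGACY_REPO = re.compile(r"github\.com/InternLM/opencompass(?![\w-])", re.IGNORECASE)
NEW_REPO = "github.com/open-compass/opencompass"


def migrate_urls(text: str) -> str:
    """Return ``text`` with every legacy repository URL pointed at open-compass."""
    return LEGACY_REPO.sub(NEW_REPO, text)


def migrate_tree(root: Path, suffixes: tuple[str, ...] = (".md", ".yml", ".py")) -> list[Path]:
    """Rewrite matching files under ``root`` in place and return the changed paths."""
    changed: list[Path] = []
    for path in sorted(root.rglob("*")):
        # Skip Git internals; ".github" is a different path component and is kept.
        if ".git" in path.parts or not path.is_file() or path.suffix not in suffixes:
            continue
        original = path.read_text(encoding="utf-8")
        updated = migrate_urls(original)
        if updated != original:
            path.write_text(updated, encoding="utf-8")
            changed.append(path)
    return changed
```

Run from a checkout, `migrate_tree(Path("."))` would rewrite the matching Markdown, YAML and Python files in place and return the list of touched paths. Reviewing the result with `git diff` before committing keeps the change as reviewable as the one shown here.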

.github/ISSUE_TEMPLATE/1_bug-report.yml (vendored; 6 lines changed)

@@ -6,7 +6,7 @@ body:
  - type: markdown
  attributes:
  value: |
- For general questions or idea discussions, please post it to our [**Forum**](https://github.com/InternLM/opencompass/discussions).
+ For general questions or idea discussions, please post it to our [**Forum**](https://github.com/open-compass/opencompass/discussions).
  If you have already identified the reason, we strongly appreciate you creating a new PR according to [the tutorial](https://opencompass.readthedocs.io/en/master/community/CONTRIBUTING.html)!
  If you need our help, please fill in the following form to help us to identify the bug.

@@ -15,9 +15,9 @@ body:
  label: Prerequisite
  description: Please check the following items before creating a new issue.
  options:
- - label: I have searched [Issues](https://github.com/InternLM/opencompass/issues/) and [Discussions](https://github.com/InternLM/opencompass/discussions) but cannot get the expected help.
+ - label: I have searched [Issues](https://github.com/open-compass/opencompass/issues/) and [Discussions](https://github.com/open-compass/opencompass/discussions) but cannot get the expected help.
  required: true
- - label: The bug has not been fixed in the [latest version](https://github.com/InternLM/opencompass).
+ - label: The bug has not been fixed in the [latest version](https://github.com/open-compass/opencompass).
  required: true

  - type: dropdown

.github/ISSUE_TEMPLATE/2_feature-request.yml (vendored; 2 lines changed)

@@ -6,7 +6,7 @@ body:
  - type: markdown
  attributes:
  value: |
- For general questions or idea discussions, please post it to our [**Forum**](https://github.com/InternLM/opencompass/discussions).
+ For general questions or idea discussions, please post it to our [**Forum**](https://github.com/open-compass/opencompass/discussions).
  If you have already implemented the feature, we strongly appreciate you creating a new PR according to [the tutorial](https://opencompass.readthedocs.io/en/master/community/CONTRIBUTING.html)!

  - type: textarea

.github/ISSUE_TEMPLATE/3_bug-report_zh.yml (vendored; 6 lines changed)

@@ -7,7 +7,7 @@ body:
  attributes:
  value: |
  We recommend using the English template Bug report so that your issue can help more people.
- For general questions or ideas, please discuss them in our [**Forum**](https://github.com/InternLM/opencompass/discussions).
+ For general questions or ideas, please discuss them in our [**Forum**](https://github.com/open-compass/opencompass/discussions).
  If you already have a solution, you are very welcome to create a new PR directly to fix the problem. For how to create a PR, see the [documentation](https://opencompass.readthedocs.io/zh_CN/master/community/CONTRIBUTING.html).
  If you need our help, please fill in the following form to help us locate the bug.

@@ -16,9 +16,9 @@ body:
  label: Prerequisites
  description: Please check the following items before creating a new issue.
  options:
- - label: I have searched [Issues](https://github.com/InternLM/opencompass/issues/) and [Discussions](https://github.com/InternLM/opencompass/discussions) but did not get the expected help.
+ - label: I have searched [Issues](https://github.com/open-compass/opencompass/issues/) and [Discussions](https://github.com/open-compass/opencompass/discussions) but did not get the expected help.
  required: true
- - label: The bug has not been fixed in the [latest version](https://github.com/InternLM/opencompass).
+ - label: The bug has not been fixed in the [latest version](https://github.com/open-compass/opencompass).
  required: true

  - type: dropdown

@@ -7,7 +7,7 @@ body:
  attributes:
  value: |
  We recommend using the English template Feature request so that your issue can help more people.
- For general questions or ideas, please discuss them in our [**Forum**](https://github.com/InternLM/opencompass/discussions).
+ For general questions or ideas, please discuss them in our [**Forum**](https://github.com/open-compass/opencompass/discussions).
  If you have already implemented the feature, you are very welcome to create a new PR directly. For how to create a PR, see the [documentation](https://opencompass.readthedocs.io/zh_CN/master/community/CONTRIBUTING.html).

  - type: textarea

.github/ISSUE_TEMPLATE/config.yml (vendored; 2 lines changed)

@@ -5,7 +5,7 @@ contact_links:
  url: https://opencompass.readthedocs.io/en/latest/
  about: Check if your question is answered in docs
  - name: 💬 General questions (seeking help)
- url: https://github.com/InternLM/OpenCompass/discussions
+ url: https://github.com/open-compass/opencompass/discussions
  about: Ask general usage questions and discuss with other OpenCompass community members
  - name: 🌐 Explore OpenCompass (official website)
  url: https://opencompass.org.cn/

README.md (12 lines changed)

@@ -4,14 +4,14 @@
  <br />

  [](https://opencompass.readthedocs.io/en)
- [](https://github.com/InternLM/opencompass/blob/main/LICENSE)
+ [](https://github.com/open-compass/opencompass/blob/main/LICENSE)

  <!-- [](https://pypi.org/project/opencompass/) -->

  [🌐Website](https://opencompass.org.cn/) |
  [📘Documentation](https://opencompass.readthedocs.io/en/latest/) |
  [🛠️Installation](https://opencompass.readthedocs.io/en/latest/get_started.html#installation) |
- [🤔Reporting Issues](https://github.com/InternLM/opencompass/issues/new/choose)
+ [🤔Reporting Issues](https://github.com/open-compass/opencompass/issues/new/choose)

  English | [简体中文](README_zh-CN.md)

@@ -29,7 +29,7 @@ Just like a compass guides us on our journey, OpenCompass will guide you through

  > **🔥 Attention**<br />
  > We have launched the OpenCompass Collaboration project and welcome contributions of diverse evaluation benchmarks to OpenCompass!
- > Click [Issue](https://github.com/InternLM/opencompass/issues/248) for more information.
+ > Click [Issue](https://github.com/open-compass/opencompass/issues/248) for more information.
  > Let's work together to build a more powerful OpenCompass toolkit!

  ## 🚀 What's New <a><img width="35" height="20" src="https://user-images.githubusercontent.com/12782558/212848161-5e783dd6-11e8-4fe0-bbba-39ffb77730be.png"></a>

@@ -311,11 +311,11 @@ Below are the steps for quick installation and datasets preparation.
  ```Python
  conda create --name opencompass python=3.10 pytorch torchvision pytorch-cuda -c nvidia -c pytorch -y
  conda activate opencompass
- git clone https://github.com/InternLM/opencompass opencompass
+ git clone https://github.com/open-compass/opencompass opencompass
  cd opencompass
  pip install -e .
  # Download dataset to data/ folder
- wget https://github.com/InternLM/opencompass/releases/download/0.1.1/OpenCompassData.zip
+ wget https://github.com/open-compass/opencompass/releases/download/0.1.1/OpenCompassData.zip
  unzip OpenCompassData.zip
  ```

@@ -389,7 +389,7 @@ Some datasets and prompt implementations are modified from [chain-of-thought-hub
  @misc{2023opencompass,
  title={OpenCompass: A Universal Evaluation Platform for Foundation Models},
  author={OpenCompass Contributors},
- howpublished = {\url{https://github.com/InternLM/OpenCompass}},
+ howpublished = {\url{https://github.com/open-compass/opencompass}},
  year={2023}
  }
  ```

@@ -4,14 +4,14 @@
  <br />

  [](https://opencompass.readthedocs.io/zh_CN)
- [](https://github.com/InternLM/opencompass/blob/main/LICENSE)
+ [](https://github.com/open-compass/opencompass/blob/main/LICENSE)

  <!-- [](https://pypi.org/project/opencompass/) -->

  [🌐Website](https://opencompass.org.cn/) |
  [📘Documentation](https://opencompass.readthedocs.io/zh_CN/latest/index.html) |
  [🛠️Installation](https://opencompass.readthedocs.io/zh_CN/latest/get_started.html#id1) |
- [🤔Reporting Issues](https://github.com/InternLM/opencompass/issues/new/choose)
+ [🤔Reporting Issues](https://github.com/open-compass/opencompass/issues/new/choose)

  [English](/README.md) | 简体中文

@@ -29,7 +29,7 @@

  > **🔥 Attention**<br />
  > We are officially launching the OpenCompass co-construction project and invite community users to contribute more representative and credible objective evaluation datasets to OpenCompass!
- > Click [Issue](https://github.com/InternLM/opencompass/issues/248) for more datasets.
+ > Click [Issue](https://github.com/open-compass/opencompass/issues/248) for more datasets.
  > Let's work together to build a powerful, easy-to-use evaluation platform for large models!

  ## 🚀 Latest Updates <a><img width="35" height="20" src="https://user-images.githubusercontent.com/12782558/212848161-5e783dd6-11e8-4fe0-bbba-39ffb77730be.png"></a>

@@ -312,11 +312,11 @@ OpenCompass is a one-stop platform for large-model evaluation. Its main features are as follows
  ```Python
  conda create --name opencompass python=3.10 pytorch torchvision pytorch-cuda -c nvidia -c pytorch -y
  conda activate opencompass
- git clone https://github.com/InternLM/opencompass opencompass
+ git clone https://github.com/open-compass/opencompass opencompass
  cd opencompass
  pip install -e .
  # Download datasets to data/
- wget https://github.com/InternLM/opencompass/releases/download/0.1.1/OpenCompassData.zip
+ wget https://github.com/open-compass/opencompass/releases/download/0.1.1/OpenCompassData.zip
  unzip OpenCompassData.zip
  ```

@@ -392,7 +392,7 @@ python run.py --datasets ceval_ppl mmlu_ppl \
  @misc{2023opencompass,
  title={OpenCompass: A Universal Evaluation Platform for Foundation Models},
  author={OpenCompass Contributors},
- howpublished = {\url{https://github.com/InternLM/OpenCompass}},
+ howpublished = {\url{https://github.com/open-compass/opencompass}},
  year={2023}
  }
  ```

@@ -39,9 +39,9 @@ When the model inference and code evaluation services are running on the same ho

  ### Configuration File

- We provide [the configuration file](https://github.com/InternLM/opencompass/blob/main/configs/eval_codegeex2.py) for evaluating `codegeex2` on `humanevalx` as a reference.
+ We provide [the configuration file](https://github.com/open-compass/opencompass/blob/main/configs/eval_codegeex2.py) for evaluating `codegeex2` on `humanevalx` as a reference.

- The dataset and related post-processing configuration files can be found at this [link](https://github.com/InternLM/opencompass/tree/main/configs/datasets/humanevalx); pay attention to the `evaluator` field in `humanevalx_eval_cfg_dict`.
+ The dataset and related post-processing configuration files can be found at this [link](https://github.com/open-compass/opencompass/tree/main/configs/datasets/humanevalx); pay attention to the `evaluator` field in `humanevalx_eval_cfg_dict`.

  ```python
  from opencompass.openicl.icl_prompt_template import PromptTemplate

@@ -93,7 +93,7 @@ html_theme_options = {
  'menu': [
  {
  'name': 'GitHub',
- 'url': 'https://github.com/InternLM/opencompass'
+ 'url': 'https://github.com/open-compass/opencompass'
  },
  ],
  # Specify the language of shared menu

@@ -12,7 +12,7 @@
  2. Install OpenCompass:

  ```bash
- git clone https://github.com/InternLM/opencompass.git
+ git clone https://github.com/open-compass/opencompass.git
  cd opencompass
  pip install -e .
  ```

@@ -51,7 +51,7 @@
  cd ..
  ```

- You can find example configs in `configs/models`. ([example](https://github.com/InternLM/opencompass/blob/eb4822a94d624a4e16db03adeb7a59bbd10c2012/configs/models/llama2_7b_chat.py))
+ You can find example configs in `configs/models`. ([example](https://github.com/open-compass/opencompass/blob/eb4822a94d624a4e16db03adeb7a59bbd10c2012/configs/models/llama2_7b_chat.py))

  </details>

@@ -66,7 +66,7 @@ Run the following commands to download and place the datasets in the `${OpenComp

  ```bash
  # Run in the OpenCompass directory
- wget https://github.com/InternLM/opencompass/releases/download/0.1.1/OpenCompassData.zip
+ wget https://github.com/open-compass/opencompass/releases/download/0.1.1/OpenCompassData.zip
  unzip OpenCompassData.zip
  ```
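The download step above can also be written with only the Python standard library, for example in environments without `wget` or `unzip`. This is an illustrative sketch, not an OpenCompass utility. `download` and `extract` are hypothetical helper names. The sketch assumes, as the instructions imply, that unpacking the archive in the repository root produces the `data/` folder.

```python
import urllib.request
import zipfile
from pathlib import Path

# Release asset URL as it appears in the updated instructions.
DATA_URL = "https://github.com/open-compass/opencompass/releases/download/0.1.1/OpenCompassData.zip"


def download(url: str, dest: Path) -> Path:
    """Save ``url`` to ``dest`` (the counterpart of the ``wget`` line)."""
    urllib.request.urlretrieve(url, dest)
    return dest


def extract(archive: Path, target_dir: Path) -> list[str]:
    """Unpack ``archive`` into ``target_dir`` (the counterpart of ``unzip``) and list its members."""
    with zipfile.ZipFile(archive) as zf:
        zf.extractall(target_dir)
        return zf.namelist()
```

From the OpenCompass root, `extract(download(DATA_URL, Path("OpenCompassData.zip")), Path("."))` mirrors the two shell commands.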

@@ -74,10 +74,10 @@ OpenCompass has supported most of the datasets commonly used for performance com

  # Quick Start

- We will demonstrate some basic features of OpenCompass by evaluating the pretrained models [OPT-125M](https://huggingface.co/facebook/opt-125m) and [OPT-350M](https://huggingface.co/facebook/opt-350m) on the [SIQA](https://huggingface.co/datasets/social_i_qa) and [Winograd](https://huggingface.co/datasets/winogrande) benchmark tasks, whose config file is located at [configs/eval_demo.py](https://github.com/InternLM/opencompass/blob/main/configs/eval_demo.py).
+ We will demonstrate some basic features of OpenCompass by evaluating the pretrained models [OPT-125M](https://huggingface.co/facebook/opt-125m) and [OPT-350M](https://huggingface.co/facebook/opt-350m) on the [SIQA](https://huggingface.co/datasets/social_i_qa) and [Winograd](https://huggingface.co/datasets/winogrande) benchmark tasks, whose config file is located at [configs/eval_demo.py](https://github.com/open-compass/opencompass/blob/main/configs/eval_demo.py).

  Before running this experiment, make sure you have installed OpenCompass locally; it should run successfully on a single _GTX-1660-6G_ GPU.
- For larger models such as Llama-7B, refer to the other examples provided in the [configs directory](https://github.com/InternLM/opencompass/tree/main/configs).
+ For larger models such as Llama-7B, refer to the other examples provided in the [configs directory](https://github.com/open-compass/opencompass/tree/main/configs).

  ## Configure an Evaluation Task

@@ -270,7 +270,7 @@ datasets = [*siqa_datasets, *winograd_datasets] # The final config needs t

  Dataset configurations typically come in two types, 'ppl' and 'gen', indicating the evaluation method used: `ppl` denotes discriminative evaluation and `gen` denotes generative evaluation.

- Moreover, [configs/datasets/collections](https://github.com/InternLM/OpenCompass/blob/main/configs/datasets/collections) houses various dataset collections, making comprehensive evaluations convenient. OpenCompass often uses [`base_medium.py`](/configs/datasets/collections/base_medium.py) for full-scale model testing. To replicate results, simply import that file, for example:
+ Moreover, [configs/datasets/collections](https://github.com/open-compass/opencompass/blob/main/configs/datasets/collections) houses various dataset collections, making comprehensive evaluations convenient. OpenCompass often uses [`base_medium.py`](/configs/datasets/collections/base_medium.py) for full-scale model testing. To replicate results, simply import that file, for example:

  ```bash
  python run.py --models hf_llama_7b --datasets base_medium

@@ -43,7 +43,7 @@ Pull requests let you tell others about changes you have pushed to a branch in a
  - When you work on your first PR

  Fork the OpenCompass repository: click the **fork** button at the top right corner of the GitHub page
[image not preserved]

  Clone the forked repository to your local machine

@@ -102,7 +102,7 @@ git checkout main -b branchname
  ```

  - Create a PR



  - Revise the PR message template to describe your motivation and the modifications made in this PR. You can also link the related issue to the PR manually in the PR message (for more information, check out the [official guidance](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue)).

@@ -4,7 +4,7 @@

  During reasoning, the CoT (Chain of Thought) method is an efficient way to help LLMs deal with complex questions, such as math problems and relational inference. OpenCompass supports multiple types of CoT methods.

[image not preserved]

  ## 1. Zero Shot CoT

@ -72,7 +72,7 @@ OpenCompass defaults to use argmax for sampling the next token. Therefore, if th

|

|||||||

|

|

||||||

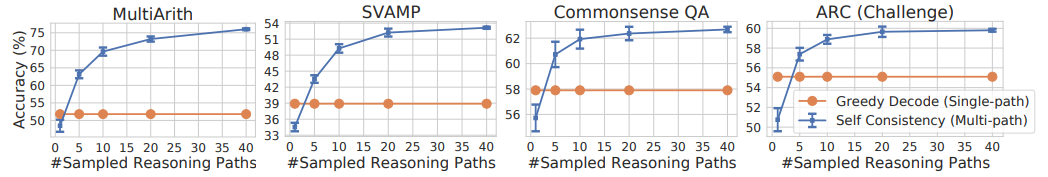

Where `SAMPLE_SIZE` is the number of reasoning paths in Self-Consistency, higher value usually outcome higher performance. The following figure from the original SC paper demonstrates the relation between reasoning paths and performance in several reasoning tasks:

|

Where `SAMPLE_SIZE` is the number of reasoning paths in Self-Consistency, higher value usually outcome higher performance. The following figure from the original SC paper demonstrates the relation between reasoning paths and performance in several reasoning tasks:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

From the figure, it can be seen that in different reasoning tasks, performance tends to improve as the number of reasoning paths increases. However, for some tasks, increasing the number of reasoning paths may reach a limit, and further increasing the number of paths may not bring significant performance improvement. Therefore, it is necessary to conduct experiments and adjustments on specific tasks to find the optimal number of reasoning paths that best suit the task.

|

From the figure, it can be seen that in different reasoning tasks, performance tends to improve as the number of reasoning paths increases. However, for some tasks, increasing the number of reasoning paths may reach a limit, and further increasing the number of paths may not bring significant performance improvement. Therefore, it is necessary to conduct experiments and adjustments on specific tasks to find the optimal number of reasoning paths that best suit the task.

|

||||||

|

|

||||||

|

|||||||
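The Self-Consistency text above treats `SAMPLE_SIZE` as the number of sampled reasoning paths. The final answer is then the one reached by the most paths, chosen by majority vote. Below is a minimal, hypothetical Python sketch of that voting step. It assumes each path has already been reduced to a final-answer string and is not OpenCompass's own implementation. The function name `self_consistency_vote` is illustrative.

```python
from collections import Counter
from collections.abc import Hashable, Sequence


def self_consistency_vote(final_answers: Sequence[Hashable]) -> Hashable:
    """Pick the answer reached by the most sampled reasoning paths.

    ``final_answers`` holds one already-extracted answer per path, so its
    length plays the role of SAMPLE_SIZE.
    """
    if not final_answers:
        raise ValueError("at least one sampled reasoning path is required")
    # most_common orders equal counts by first appearance, so ties go to the
    # answer that was sampled first.
    answer, _count = Counter(final_answers).most_common(1)[0]
    return answer
```

For example, five sampled paths ending in `["18", "18", "20", "18", "26"]` vote for `"18"`. Raising `SAMPLE_SIZE` adds more voters, which is why accuracy tends to improve until the vote stabilizes.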

@@ -103,7 +103,7 @@ use the PIQA dataset configuration file as an example to demonstrate the meaning
  configuration file. If you do not intend to modify the prompt for model testing or add new datasets, you can
  skip this section.

- The PIQA dataset [configuration file](https://github.com/InternLM/opencompass/blob/main/configs/datasets/piqa/piqa_ppl_1cf9f0.py) is as follows.
+ The PIQA dataset [configuration file](https://github.com/open-compass/opencompass/blob/main/configs/datasets/piqa/piqa_ppl_1cf9f0.py) is as follows.
  It is a configuration for evaluating based on perplexity (PPL) and does not use In-Context Learning.

  ```python

@@ -12,7 +12,7 @@ There is also a type of **scoring-type** evaluation task without standard answer

  ## Supported Evaluation Metrics

- Currently, in OpenCompass, commonly used Evaluators are mainly located in the [`opencompass/openicl/icl_evaluator`](https://github.com/InternLM/opencompass/tree/main/opencompass/openicl/icl_evaluator) folder. There are also some dataset-specific metrics placed in parts of [`opencompass/datasets`](https://github.com/InternLM/opencompass/tree/main/opencompass/datasets). Below is a summary:
+ Currently, in OpenCompass, commonly used Evaluators are mainly located in the [`opencompass/openicl/icl_evaluator`](https://github.com/open-compass/opencompass/tree/main/opencompass/openicl/icl_evaluator) folder. There are also some dataset-specific metrics placed in parts of [`opencompass/datasets`](https://github.com/open-compass/opencompass/tree/main/opencompass/datasets). Below is a summary:

  | Evaluation Strategy | Evaluation Metrics | Common Postprocessing Method | Datasets |
  | ------------------- | -------------------- | ---------------------------- | -------------------------------------------------------------------- |

@@ -33,7 +33,7 @@ Currently, in OpenCompass, commonly used Evaluators are mainly located in the [`

  The evaluation standard configuration is generally placed in the dataset configuration file, and the final xxdataset_eval_cfg will be passed to `dataset.infer_cfg` as an instantiation parameter.

- Below is the definition of `govrepcrs_eval_cfg`, and you can refer to [configs/datasets/govrepcrs](https://github.com/InternLM/opencompass/tree/main/configs/datasets/govrepcrs).
+ Below is the definition of `govrepcrs_eval_cfg`, and you can refer to [configs/datasets/govrepcrs](https://github.com/open-compass/opencompass/tree/main/configs/datasets/govrepcrs).

  ```python
  from opencompass.openicl.icl_evaluator import BleuEvaluator

@@ -39,8 +39,8 @@ telnet your_service_ip_address your_service_port

  ### Configuration File

- We provide a [configuration file](https://github.com/InternLM/opencompass/blob/main/configs/eval_codegeex2.py) for evaluating humaneval-x on codegeex2 as a reference.
+ We provide a [configuration file](https://github.com/open-compass/opencompass/blob/main/configs/eval_codegeex2.py) for evaluating humaneval-x on codegeex2 as a reference.
- The dataset and related post-processing configuration files can be found at this [link](https://github.com/InternLM/opencompass/tree/main/configs/datasets/humanevalx); note the `evaluator` field in humanevalx_eval_cfg_dict.
+ The dataset and related post-processing configuration files can be found at this [link](https://github.com/open-compass/opencompass/tree/main/configs/datasets/humanevalx); note the `evaluator` field in humanevalx_eval_cfg_dict.

  ```python
  from opencompass.openicl.icl_prompt_template import PromptTemplate

@@ -93,7 +93,7 @@ html_theme_options = {
  'menu': [
  {
  'name': 'GitHub',
- 'url': 'https://github.com/InternLM/opencompass'
+ 'url': 'https://github.com/open-compass/opencompass'
  },
  ],
  # Specify the language of shared menu

@@ -12,7 +12,7 @@
  2. Install OpenCompass:

  ```bash
- git clone https://github.com/InternLM/opencompass.git
+ git clone https://github.com/open-compass/opencompass.git
  cd opencompass
  pip install -e .
  ```

@@ -51,7 +51,7 @@
  cd ..
  ```

- You can find example configuration files for all Llama / Llama-2 / Llama-2-chat models under `configs/models`. ([example](https://github.com/InternLM/opencompass/blob/eb4822a94d624a4e16db03adeb7a59bbd10c2012/configs/models/llama2_7b_chat.py))
+ You can find example configuration files for all Llama / Llama-2 / Llama-2-chat models under `configs/models`. ([example](https://github.com/open-compass/opencompass/blob/eb4822a94d624a4e16db03adeb7a59bbd10c2012/configs/models/llama2_7b_chat.py))

  </details>

@@ -66,7 +66,7 @@ The datasets supported by OpenCompass mainly consist of two parts:
  Run the following commands in the OpenCompass project root directory to prepare the datasets in the `${OpenCompass}/data` directory:

  ```bash
- wget https://github.com/InternLM/opencompass/releases/download/0.1.1/OpenCompassData.zip
+ wget https://github.com/open-compass/opencompass/releases/download/0.1.1/OpenCompassData.zip
  unzip OpenCompassData.zip
  ```

@ -77,7 +77,7 @@ OpenCompass 已经支持了大多数常用于性能比较的数据集,具体

|

|||||||

Using the [OPT-125M](https://huggingface.co/facebook/opt-125m) and [OPT-350M](https://huggingface.co/facebook/opt-350m) pretrained base models evaluated on [SIQA](https://huggingface.co/datasets/social_i_qa) and [Winograd](https://huggingface.co/datasets/winogrande) as an example, we will walk you through some basic features of OpenCompass.

Before running, make sure OpenCompass is installed. This experiment runs successfully on a single _GTX-1660-6G_ GPU.

For larger models such as Llama-7B, refer to the other examples in [configs](https://github.com/InternLM/opencompass/tree/main/configs).
For larger models such as Llama-7B, refer to the other examples in [configs](https://github.com/open-compass/opencompass/tree/main/configs).

## Configuring the Task

@@ -268,7 +268,7 @@ datasets = [*siqa_datasets, *winograd_datasets] # the final config needs to

Dataset configs usually come in two types, `ppl` and `gen`, which indicate the evaluation method: `ppl` means discriminative evaluation and `gen` means generative evaluation.

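In addition, [configs/datasets/collections](https://github.com/InternLM/OpenCompass/blob/main/configs/datasets/collections) contains various dataset collections for comprehensive evaluation. OpenCompass often uses [`base_medium.py`](/configs/datasets/collections/base_medium.py) for a full evaluation of a model. To reproduce the results, simply import this file, for example:
In addition, [configs/datasets/collections](https://github.com/open-compass/opencompass/blob/main/configs/datasets/collections) contains various dataset collections for comprehensive evaluation. OpenCompass often uses [`base_medium.py`](/configs/datasets/collections/base_medium.py) for a full evaluation of a model. To reproduce the results, simply import this file, for example: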

```bash

python run.py --models hf_llama_7b --datasets base_medium
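The idea behind the `ppl` (discriminative) mode can be shown with a minimal sketch. It assumes the model has already returned per-token log-probabilities for each candidate answer filled into the prompt. The prediction is then the candidate with the lowest perplexity. The functions `perplexity` and `ppl_predict` are hypothetical and are not OpenCompass APIs. OpenCompass's own PPL-based inference may normalize or aggregate scores differently.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Hypothetical helper: exp of the mean negative log-likelihood of one candidate."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def ppl_predict(candidate_logprobs: Sequence[Sequence[float]]) -> int:
    """Hypothetical helper: index of the candidate the model finds least surprising."""
    scores = [perplexity(lp) for lp in candidate_logprobs]
    return min(range(len(scores)), key=scores.__getitem__)


# Two candidate answers with made-up per-token log-probabilities
print(ppl_predict([[-0.1, -0.2], [-2.0, -1.5]]))
```

Here candidate `0` wins because its tokens are far more likely under the model. In `gen` mode the model instead generates free text, which is postprocessed and compared with the reference answer.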

@@ -43,7 +43,7 @@

- When you submit a PR for the first time

Fork the original OpenCompass repository by clicking the **Fork** button in the upper-right corner of the GitHub page

Clone the forked repository to your local machine

@@ -111,7 +111,7 @@ git checkout main -b branchname

- Create a pull request

- Edit the pull request description template to explain the reason for and the content of your changes. You can also manually link related issues in the PR description (for details, see the [official documentation](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue)).

@@ -4,7 +4,7 @@

CoT (Chain-of-Thought) is an effective way to help large language models solve complex problems such as math problems and relational reasoning. OpenCompass supports several types of CoT methods.

## 1. Zero-Shot Chain-of-Thought

@@ -72,7 +72,7 @@ gsm8k_eval_cfg = dict(sc_size=SAMPLE_SIZE)

|

|

||||||

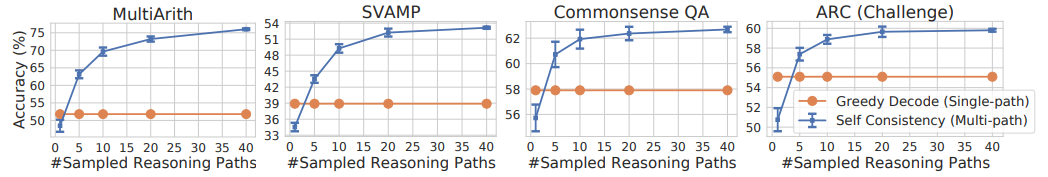

Here `SAMPLE_SIZE` is the number of reasoning paths; larger values usually lead to higher performance. The original self-consistency (SC) paper shows how the number of reasoning paths relates to performance across different reasoning tasks:

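[Figure: performance versus number of sampled reasoning paths on different reasoning tasks, from the original self-consistency paper]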

As the figure shows, performance tends to increase with the number of reasoning paths across different reasoning tasks. For some tasks, however, the gain may plateau: beyond a certain number of paths, adding more may bring no further improvement. The best number of reasoning paths should therefore be determined experimentally for each task.
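The voting step of self-consistency can be sketched as a plain majority vote. This sketch assumes the final answers have already been extracted from `SAMPLE_SIZE` sampled reasoning paths. It is a generic illustration rather than OpenCompass's implementation, and `self_consistency` is a hypothetical name.

```python
from collections import Counter
from typing import Sequence


def self_consistency(sampled_answers: Sequence[str]) -> str:
    """Hypothetical helper: majority vote over answers from sampled reasoning paths."""
    if not sampled_answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(sampled_answers)
    # most_common(1) -> [(answer, count)]; on a tie, the answer encountered first wins
    return counts.most_common(1)[0][0]


# Final answers extracted from five sampled paths (SAMPLE_SIZE = 5)
answers = ["18", "18", "26", "18", "26"]
print(self_consistency(answers))
```

Each extra path costs another full generation. Tuning `SAMPLE_SIZE` per task therefore trades inference cost against accuracy.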

@@ -99,7 +99,7 @@ models = [

We will use the PIQA dataset configuration file as an example to explain the meaning of each field in a dataset configuration file.

If you do not plan to modify the prompts used for model evaluation or add new datasets, you can skip this section.

PIQA dataset [configuration file](https://github.com/InternLM/opencompass/blob/main/configs/datasets/piqa/piqa_ppl_1cf9f0.py)
PIQA dataset [configuration file](https://github.com/open-compass/opencompass/blob/main/configs/datasets/piqa/piqa_ppl_1cf9f0.py)

As shown below, this config performs PPL (perplexity)-based evaluation and does not use In-Context Learning.

```python

@@ -12,7 +12,7 @@

## Supported Evaluation Metrics

Currently, the commonly used Evaluators in OpenCompass are mainly located in the [`opencompass/openicl/icl_evaluator`](https://github.com/InternLM/opencompass/tree/main/opencompass/openicl/icl_evaluator) folder, while some dataset-specific metrics are defined in files under [`opencompass/datasets`](https://github.com/InternLM/opencompass/tree/main/opencompass/datasets). They are summarized below:
Currently, the commonly used Evaluators in OpenCompass are mainly located in the [`opencompass/openicl/icl_evaluator`](https://github.com/open-compass/opencompass/tree/main/opencompass/openicl/icl_evaluator) folder, while some dataset-specific metrics are defined in files under [`opencompass/datasets`](https://github.com/open-compass/opencompass/tree/main/opencompass/datasets). They are summarized below:

| Metric | Evaluation Strategy | Common Postprocessing | Datasets |

| ------------------ | -------------------- | --------------------------- | -------------------------------------------------------------------- |

@@ -33,7 +33,7 @@

Evaluation settings are usually placed in the dataset configuration file; the final `xxdataset_eval_cfg` is passed to `dataset.infer_cfg` as one of the instantiation arguments.

The definition of `govrepcrs_eval_cfg` is shown below; see [configs/datasets/govrepcrs](https://github.com/InternLM/opencompass/tree/main/configs/datasets/govrepcrs) for details.
The definition of `govrepcrs_eval_cfg` is shown below; see [configs/datasets/govrepcrs](https://github.com/open-compass/opencompass/tree/main/configs/datasets/govrepcrs) for details.

```python

from opencompass.openicl.icl_evaluator import BleuEvaluator
